Nick Brown and I just lately printed this paper, How statistical challenges and misreadings of the literature combineto produce unreplicable science: An instance from psychology, within the journal, Advances in Strategies and Practices in Psychological Science, a few printed article that claimed to seek out that therapeutic of bruises could possibly be sped or slowed by manipulating folks’s subjective sense of time. The article underneath dialogue had main issues–together with weak idea, a flawed information evaluation, and a number of errors in summarizing the literature–to the extent that we didn’t discover their claims to be supported by their proof.

That type of factor occurs–we name it on a regular basis, bread-and-butter pseudoscience. The purpose of the brand new paper by Nick and me was not a lot to shoot down one explicit declare of mind-body therapeutic however quite to discover some common causes for scientific error and overconfidence and to offer a template for future researchers to keep away from these issues sooner or later.

We posted this the opposite day on the weblog (underneath the heading, 7 steps to junk science that may obtain worldly success), and one thing got here up in feedback, one thing I hadn’t considered earlier than and I needed to share with you. The commenter, Jean-Paul, pointed to a misunderstanding that may come up when becoming multilevel fashions utilizing some software program.

Want for multilevel modeling or some equal adjustment for clustering in information

Right here was the issue. The article in query estimated therapy results with a cluster design: they’d three remedies utilized to 33 analysis individuals (in psychology jargon, “topics”) with information supplied by 25 raters. The overall variety of measurements was 2425 (not fairly 3*33*25 due to some lacking information, which isn’t the purpose on this story).

Once you wish to estimate therapy results from a clustered design, you should match a multilevel mannequin or make some equal adjustment. In any other case your customary errors will likely be too small. On this case you must embrace results for individuals (some have extra distinguished bruises than others on this research) and for raters (who may have systematic variations in how they characterize the severity of a bruise utilizing a numerical ranking).

The researchers knew to do that, in order that they match a multilevel mannequin. Happily for me, they used the R program lmer, which I’m accustomed to. Right here’s the output, which for readability I present utilizing the show operate within the arm package deal:

lmer(components = Therapeutic ~ Situation + (1 | Topic) + (1 | ResponseId),

information = DFmodel, REML = FALSE)

coef.est coef.se

(Intercept) 6.20 0.31

Condition28 0.23 0.09

Condition56 1.05 0.09

Error phrases:

Teams Identify Std.Dev.

Topic (Intercept) 1.07

ResponseId (Intercept) 1.22

Residual 1.87

---

variety of obs: 2425, teams: Topic, 33; ResponseId, 25

AIC = 10131.4, DIC = 10119.4

deviance = 10119.4

So, nice. As anticipated, participant and rater results are massive–they differ by greater than the magnitudes of the estimated therapy results!–so it’s a superb factor we adjusted for them. And the primary results are a surprising 2.4 and 11.1 customary errors away from zero, a giant win!

Want for various slopes, not simply various intercepts

However . . . wait a minute. The purpose of this evaluation is not only to estimate common responses; it’s to estimate therapy results. And, for that, we have to enable the therapy results to differ by participant and rater–in multilevel modeling phrases, we needs to be permitting slopes in addition to intercepts to differ. That is in accordance with the well-known common precept of the design and evaluation of experiments that the error time period for any comparability needs to be on the degree of study of the comparability.

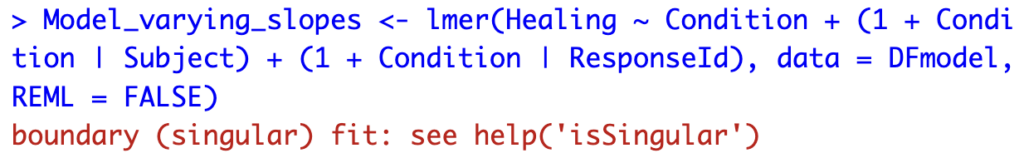

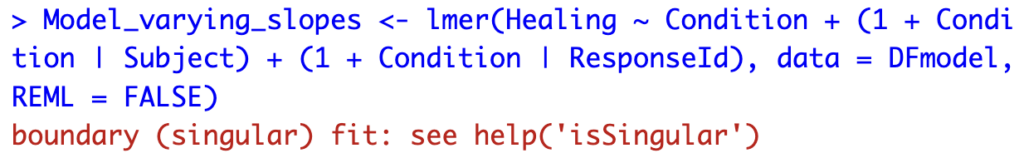

No downside, lmer can try this, and we accomplish that! right here’s the consequence:

lmer(components = Therapeutic ~ Situation + (1 + Situation | Topic) +

(1 + Situation | ResponseId), information = DFmodel, REML = FALSE)

coef.est coef.se

(Intercept) 6.18 0.39

Condition28 0.25 0.36

Condition56 1.09 0.37

Error phrases:

Teams Identify Std.Dev. Corr

Topic (Intercept) 1.71

Condition28 1.99 -0.71

Condition56 2.03 -0.72 0.65

ResponseId (Intercept) 1.24

Condition28 0.07 1.00

Condition56 0.13 -1.00 -1.00

Residual 1.51

---

variety of obs: 2425, teams: Topic, 33; ResponseId, 25

AIC = 9317.9, DIC = 9285.9

deviance = 9285.9

The estimated common therapy results have barely modified, however the usual errors are a lot greater. The fitted fashions reveals that therapy results differ so much by participant, not a lot by rater.

You is likely to be completely satisfied as a result of the t-statistic for one of many therapy comparisons continues to be 3 customary errors away from zero, however different issues stay, each with a number of comparisons and with the interpretation of the info, as we talk about in part 2.4 of our paper.

There’s one factor you may discover for those who look rigorously on the above output, which is that the estimated covariance matrix for the rater results is degenerate: the correlations are at 1 and -1. This type of factor occurs so much with multilevel fashions when information are noisy and the variety of teams is small. It’s a difficulty we mentioned in this paper from 2014.

In apply, it’s no massive deal right here: the degeneracy is a consequence of a really noisy estimate, which itself arises as a result of the noise within the information is way bigger than the sign, which itself arises as a result of the variation in therapy results throughout raters is indistinguishable from zero (take a look at these small estimated customary deviations of the various slopes for rater). However that’s all fairly delicate, not one thing that’s in any textbook, even ours!

That scary warning on the pc

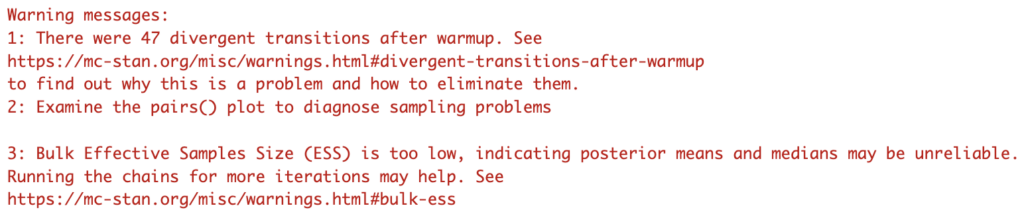

Right here’s the issue. Once you match that various slopes mannequin, R shows a warning in scary pink kind:

It’s potential that the researchers tried this varying-slope match, noticed the singularity, received scared, and retreated to the easier varying-intercept mannequin, which had the incidental advantage of giving them an estimated therapy impact that was 11 customary error from zero.

Simply to be clear, I’m not saying this can be a downside with R, or with lme4. Certainly, for those who kind assist(“isSingular”) within the R console, you’ll get some affordable recommendation, none of which is to throw out all of the various slopes. However the first choice urged there may be to “keep away from becoming overly advanced fashions within the first place,” which might be misunderstood by customers.

If you happen to match the mannequin utilizing stan_lmer on its default settings, all the things works nice:

household: gaussian [identity]

components: Therapeutic ~ Situation + (1 + Situation | Topic) + (1 + Situation |

ResponseId)

observations: 2425

------

Median MAD_SD

(Intercept) 6.22 0.40

Condition28 0.26 0.34

Condition56 1.09 0.37

Auxiliary parameter(s):

Median MAD_SD

sigma 1.51 0.02

Error phrases:

Teams Identify Std.Dev. Corr

Topic (Intercept) 1.731

Condition28 2.015 -0.63

Condition56 2.056 -0.66 0.57

ResponseId (Intercept) 1.225

Condition28 0.158 0.33

Condition56 0.172 -0.51 0.04

Residual 1.509

Num. ranges: Topic 33, ResponseId 25

These estimates common over the posterior distribution, so nothing on the boundary, no downside. And, no shock, the estimates and customary errors of the therapy results are principally unchanged.

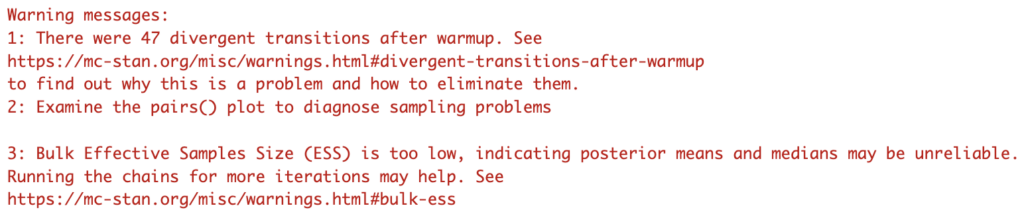

That stated, if you run stan_lmer, it offers warnings in pink too!

These warning messages are a giant downside! On one hand, yeah, you wish to warn folks; then again, it might be simply horrible if the warnings are so scary that they ship customers to make use of unhealthy fashions that don’t occur to spit out warnings.

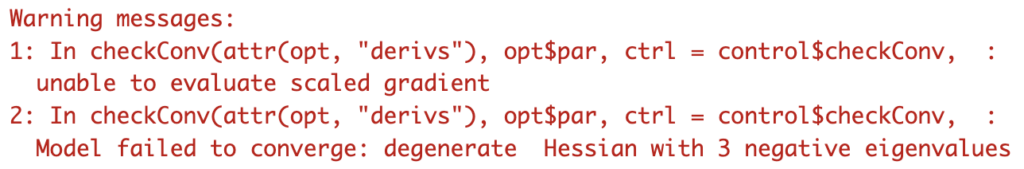

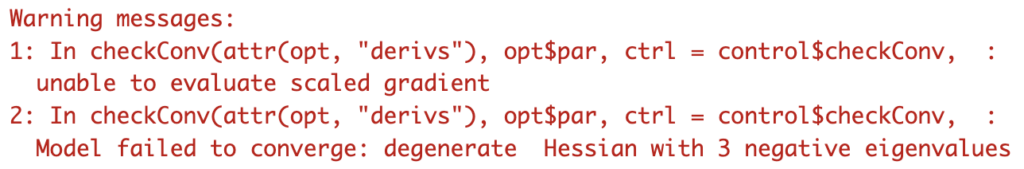

I additionally tried becoming the mannequin utilizing blmer, which I believed would work nice, however that produced some warning messages that scared even me:

By default, blme makes use of a degeneracy-avoiding prior, so this type of factor shouldn’t occur in any respect. We must always work out what’s occurring right here!

Abstract

1. If you’re estimating therapy results utilizing clustered information, try to be becoming a multilevel mannequin with various intercepts and slopes (or do the equal adjustment in another approach, for instance by boostrapping in keeping with the cluster construction). Various intercepts isn’t sufficient. If you happen to do it improper, your customary errors may be approach off. That is all in keeping with the final precept to make use of all design data within the evaluation.

2. If you happen to apply a extra difficult technique on the pc and it offers you a warning message, this does not imply that you must return to a less complicated technique. Extra difficult fashions may be more durable to suit. That is one thing you might need to cope with. If it actually bothers you, then be extra cautious in your information assortment; you possibly can design research that may be analyzed extra merely. Or you are able to do some averaging of your information, which could lose some statistical effectivity however will mean you can use easier statistical strategies (for instance, see part 2.3, “Easy paired-comparisons evaluation,” of our paper).

Nick Brown and I just lately printed this paper, How statistical challenges and misreadings of the literature combineto produce unreplicable science: An instance from psychology, within the journal, Advances in Strategies and Practices in Psychological Science, a few printed article that claimed to seek out that therapeutic of bruises could possibly be sped or slowed by manipulating folks’s subjective sense of time. The article underneath dialogue had main issues–together with weak idea, a flawed information evaluation, and a number of errors in summarizing the literature–to the extent that we didn’t discover their claims to be supported by their proof.

That type of factor occurs–we name it on a regular basis, bread-and-butter pseudoscience. The purpose of the brand new paper by Nick and me was not a lot to shoot down one explicit declare of mind-body therapeutic however quite to discover some common causes for scientific error and overconfidence and to offer a template for future researchers to keep away from these issues sooner or later.

We posted this the opposite day on the weblog (underneath the heading, 7 steps to junk science that may obtain worldly success), and one thing got here up in feedback, one thing I hadn’t considered earlier than and I needed to share with you. The commenter, Jean-Paul, pointed to a misunderstanding that may come up when becoming multilevel fashions utilizing some software program.

Want for multilevel modeling or some equal adjustment for clustering in information

Right here was the issue. The article in query estimated therapy results with a cluster design: they’d three remedies utilized to 33 analysis individuals (in psychology jargon, “topics”) with information supplied by 25 raters. The overall variety of measurements was 2425 (not fairly 3*33*25 due to some lacking information, which isn’t the purpose on this story).

Once you wish to estimate therapy results from a clustered design, you should match a multilevel mannequin or make some equal adjustment. In any other case your customary errors will likely be too small. On this case you must embrace results for individuals (some have extra distinguished bruises than others on this research) and for raters (who may have systematic variations in how they characterize the severity of a bruise utilizing a numerical ranking).

The researchers knew to do that, in order that they match a multilevel mannequin. Happily for me, they used the R program lmer, which I’m accustomed to. Right here’s the output, which for readability I present utilizing the show operate within the arm package deal:

lmer(components = Therapeutic ~ Situation + (1 | Topic) + (1 | ResponseId),

information = DFmodel, REML = FALSE)

coef.est coef.se

(Intercept) 6.20 0.31

Condition28 0.23 0.09

Condition56 1.05 0.09

Error phrases:

Teams Identify Std.Dev.

Topic (Intercept) 1.07

ResponseId (Intercept) 1.22

Residual 1.87

---

variety of obs: 2425, teams: Topic, 33; ResponseId, 25

AIC = 10131.4, DIC = 10119.4

deviance = 10119.4

So, nice. As anticipated, participant and rater results are massive–they differ by greater than the magnitudes of the estimated therapy results!–so it’s a superb factor we adjusted for them. And the primary results are a surprising 2.4 and 11.1 customary errors away from zero, a giant win!

Want for various slopes, not simply various intercepts

However . . . wait a minute. The purpose of this evaluation is not only to estimate common responses; it’s to estimate therapy results. And, for that, we have to enable the therapy results to differ by participant and rater–in multilevel modeling phrases, we needs to be permitting slopes in addition to intercepts to differ. That is in accordance with the well-known common precept of the design and evaluation of experiments that the error time period for any comparability needs to be on the degree of study of the comparability.

No downside, lmer can try this, and we accomplish that! right here’s the consequence:

lmer(components = Therapeutic ~ Situation + (1 + Situation | Topic) +

(1 + Situation | ResponseId), information = DFmodel, REML = FALSE)

coef.est coef.se

(Intercept) 6.18 0.39

Condition28 0.25 0.36

Condition56 1.09 0.37

Error phrases:

Teams Identify Std.Dev. Corr

Topic (Intercept) 1.71

Condition28 1.99 -0.71

Condition56 2.03 -0.72 0.65

ResponseId (Intercept) 1.24

Condition28 0.07 1.00

Condition56 0.13 -1.00 -1.00

Residual 1.51

---

variety of obs: 2425, teams: Topic, 33; ResponseId, 25

AIC = 9317.9, DIC = 9285.9

deviance = 9285.9

The estimated common therapy results have barely modified, however the usual errors are a lot greater. The fitted fashions reveals that therapy results differ so much by participant, not a lot by rater.

You is likely to be completely satisfied as a result of the t-statistic for one of many therapy comparisons continues to be 3 customary errors away from zero, however different issues stay, each with a number of comparisons and with the interpretation of the info, as we talk about in part 2.4 of our paper.

There’s one factor you may discover for those who look rigorously on the above output, which is that the estimated covariance matrix for the rater results is degenerate: the correlations are at 1 and -1. This type of factor occurs so much with multilevel fashions when information are noisy and the variety of teams is small. It’s a difficulty we mentioned in this paper from 2014.

In apply, it’s no massive deal right here: the degeneracy is a consequence of a really noisy estimate, which itself arises as a result of the noise within the information is way bigger than the sign, which itself arises as a result of the variation in therapy results throughout raters is indistinguishable from zero (take a look at these small estimated customary deviations of the various slopes for rater). However that’s all fairly delicate, not one thing that’s in any textbook, even ours!

That scary warning on the pc

Right here’s the issue. Once you match that various slopes mannequin, R shows a warning in scary pink kind:

It’s potential that the researchers tried this varying-slope match, noticed the singularity, received scared, and retreated to the easier varying-intercept mannequin, which had the incidental advantage of giving them an estimated therapy impact that was 11 customary error from zero.

Simply to be clear, I’m not saying this can be a downside with R, or with lme4. Certainly, for those who kind assist(“isSingular”) within the R console, you’ll get some affordable recommendation, none of which is to throw out all of the various slopes. However the first choice urged there may be to “keep away from becoming overly advanced fashions within the first place,” which might be misunderstood by customers.

If you happen to match the mannequin utilizing stan_lmer on its default settings, all the things works nice:

household: gaussian [identity]

components: Therapeutic ~ Situation + (1 + Situation | Topic) + (1 + Situation |

ResponseId)

observations: 2425

------

Median MAD_SD

(Intercept) 6.22 0.40

Condition28 0.26 0.34

Condition56 1.09 0.37

Auxiliary parameter(s):

Median MAD_SD

sigma 1.51 0.02

Error phrases:

Teams Identify Std.Dev. Corr

Topic (Intercept) 1.731

Condition28 2.015 -0.63

Condition56 2.056 -0.66 0.57

ResponseId (Intercept) 1.225

Condition28 0.158 0.33

Condition56 0.172 -0.51 0.04

Residual 1.509

Num. ranges: Topic 33, ResponseId 25

These estimates common over the posterior distribution, so nothing on the boundary, no downside. And, no shock, the estimates and customary errors of the therapy results are principally unchanged.

That stated, if you run stan_lmer, it offers warnings in pink too!

These warning messages are a giant downside! On one hand, yeah, you wish to warn folks; then again, it might be simply horrible if the warnings are so scary that they ship customers to make use of unhealthy fashions that don’t occur to spit out warnings.

I additionally tried becoming the mannequin utilizing blmer, which I believed would work nice, however that produced some warning messages that scared even me:

By default, blme makes use of a degeneracy-avoiding prior, so this type of factor shouldn’t occur in any respect. We must always work out what’s occurring right here!

Abstract

1. If you’re estimating therapy results utilizing clustered information, try to be becoming a multilevel mannequin with various intercepts and slopes (or do the equal adjustment in another approach, for instance by boostrapping in keeping with the cluster construction). Various intercepts isn’t sufficient. If you happen to do it improper, your customary errors may be approach off. That is all in keeping with the final precept to make use of all design data within the evaluation.

2. If you happen to apply a extra difficult technique on the pc and it offers you a warning message, this does not imply that you must return to a less complicated technique. Extra difficult fashions may be more durable to suit. That is one thing you might need to cope with. If it actually bothers you, then be extra cautious in your information assortment; you possibly can design research that may be analyzed extra merely. Or you are able to do some averaging of your information, which could lose some statistical effectivity however will mean you can use easier statistical strategies (for instance, see part 2.3, “Easy paired-comparisons evaluation,” of our paper).