Editor’s observe: This text, initially revealed on Nov. 15, 2023, has been up to date.

To grasp the most recent developments in generative AI, think about a courtroom.

Judges hear and determine circumstances based mostly on their basic understanding of the regulation. Typically a case — like a malpractice swimsuit or a labor dispute — requires particular experience, so judges ship court docket clerks to a regulation library, in search of precedents and particular circumstances they will cite.

Like a great decide, giant language fashions (LLMs) can reply to all kinds of human queries. However to ship authoritative solutions — grounded in particular court docket proceedings or comparable ones — the mannequin must be supplied that info.

The court docket clerk of AI is a course of known as retrieval-augmented era, or RAG for brief.

How It Obtained Named ‘RAG’

Patrick Lewis, lead creator of the 2020 paper that coined the time period, apologized for the unflattering acronym that now describes a rising household of strategies throughout lots of of papers and dozens of economic providers he believes characterize the way forward for generative AI.

“We undoubtedly would have put extra thought into the identify had we identified our work would change into so widespread,” Lewis mentioned in an interview from Singapore, the place he was sharing his concepts with a regional convention of database builders.

“We at all times deliberate to have a nicer sounding identify, however when it got here time to put in writing the paper, nobody had a greater concept,” mentioned Lewis, who now leads a RAG workforce at AI startup Cohere.

So, What Is Retrieval-Augmented Technology (RAG)?

Retrieval-augmented era is a method for enhancing the accuracy and reliability of generative AI fashions with info fetched from particular and related information sources.

In different phrases, it fills a spot in how LLMs work. Beneath the hood, LLMs are neural networks, usually measured by what number of parameters they include. An LLM’s parameters primarily characterize the overall patterns of how people use phrases to type sentences.

That deep understanding, generally known as parameterized data, makes LLMs helpful in responding to basic prompts. Nevertheless, it doesn’t serve customers who need a deeper dive into a particular kind of data.

Combining Inner, Exterior Sources

Lewis and colleagues developed retrieval-augmented era to hyperlink generative AI providers to exterior assets, particularly ones wealthy within the newest technical particulars.

The paper, with coauthors from the previous Fb AI Analysis (now Meta AI), College School London and New York College, known as RAG “a general-purpose fine-tuning recipe” as a result of it may be utilized by practically any LLM to attach with virtually any exterior useful resource.

Constructing Person Belief

Retrieval-augmented era offers fashions sources they will cite, like footnotes in a analysis paper, so customers can verify any claims. That builds belief.

What’s extra, the method will help fashions clear up ambiguity in a person question. It additionally reduces the likelihood {that a} mannequin will give a really believable however incorrect reply, a phenomenon known as hallucination.

One other nice benefit of RAG is it’s comparatively straightforward. A weblog by Lewis and three of the paper’s coauthors mentioned builders can implement the method with as few as 5 strains of code.

That makes the strategy quicker and cheaper than retraining a mannequin with further datasets. And it lets customers hot-swap new sources on the fly.

How Individuals Are Utilizing RAG

With retrieval-augmented era, customers can primarily have conversations with information repositories, opening up new sorts of experiences. This implies the purposes for RAG might be a number of occasions the variety of accessible datasets.

For instance, a generative AI mannequin supplemented with a medical index might be an amazing assistant for a physician or nurse. Monetary analysts would profit from an assistant linked to market information.

Actually, virtually any enterprise can flip its technical or coverage manuals, movies or logs into assets known as data bases that may improve LLMs. These sources can allow use circumstances corresponding to buyer or subject help, worker coaching and developer productiveness.

The broad potential is why corporations together with AWS, IBM, Glean, Google, Microsoft, NVIDIA, Oracle and Pinecone are adopting RAG.

Getting Began With Retrieval-Augmented Technology

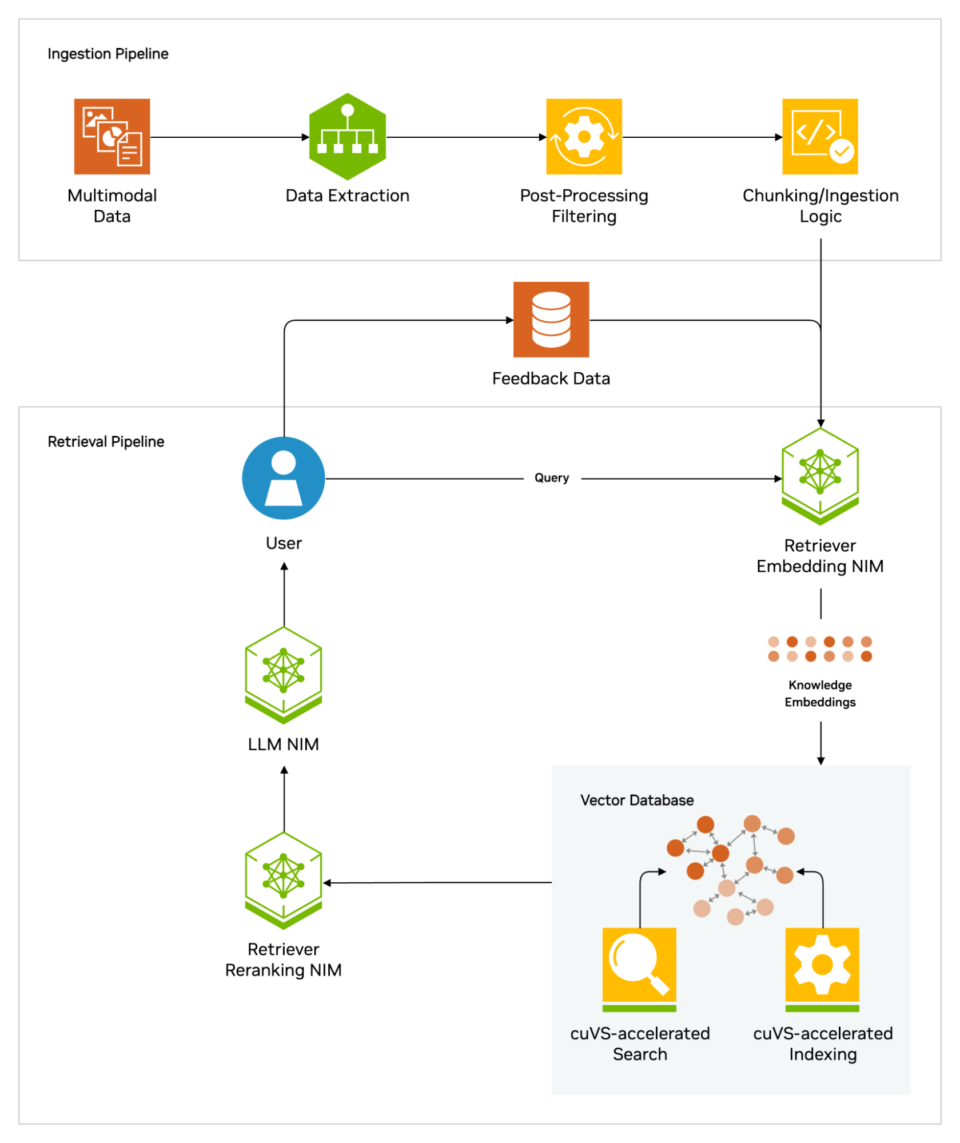

The NVIDIA AI Blueprint for RAG helps builders construct pipelines to attach their AI purposes to enterprise information utilizing industry-leading know-how. This reference structure offers builders with a basis for constructing scalable and customizable retrieval pipelines that ship excessive accuracy and throughput.

The blueprint can be utilized as is, or mixed with different NVIDIA Blueprints for superior use circumstances together with digital people and AI assistants. For instance, the blueprint for AI assistants empowers organizations to construct AI brokers that may rapidly scale their customer support operations with generative AI and RAG.

As well as, builders and IT groups can strive the free, hands-on NVIDIA LaunchPad lab for constructing AI chatbots with RAG, enabling quick and correct responses from enterprise information.

All of those assets use NVIDIA NeMo Retriever, which offers main, large-scale retrieval accuracy and NVIDIA NIM microservices for simplifying safe, high-performance AI deployment throughout clouds, information facilities and workstations. These are supplied as a part of the NVIDIA AI Enterprise software program platform for accelerating AI growth and deployment.

Getting the most effective efficiency for RAG workflows requires large quantities of reminiscence and compute to maneuver and course of information. The NVIDIA GH200 Grace Hopper Superchip, with its 288GB of quick HBM3e reminiscence and eight petaflops of compute, is right — it could actually ship a 150x speedup over utilizing a CPU.

As soon as corporations get aware of RAG, they will mix a wide range of off-the-shelf or customized LLMs with inside or exterior data bases to create a variety of assistants that assist their workers and prospects.

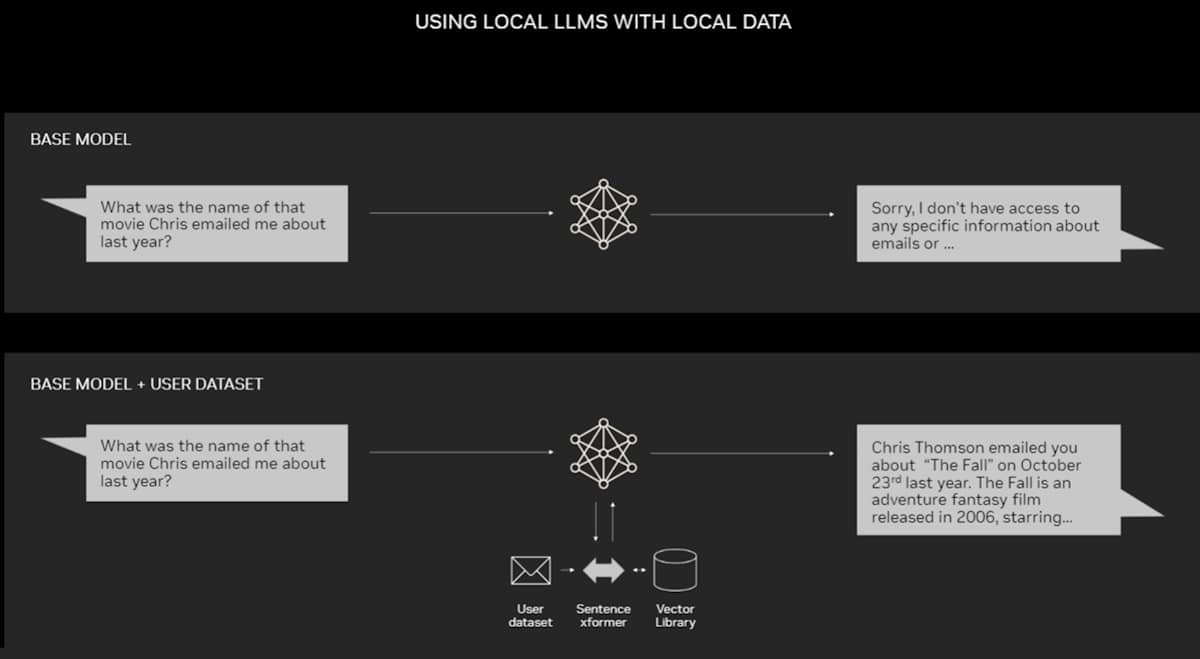

RAG doesn’t require a knowledge middle. LLMs are debuting on Home windows PCs, due to NVIDIA software program that permits all kinds of purposes customers can entry even on their laptops.

PCs geared up with NVIDIA RTX GPUs can now run some AI fashions regionally. Through the use of RAG on a PC, customers can hyperlink to a non-public data supply – whether or not that be emails, notes or articles – to enhance responses. The person can then really feel assured that their information supply, prompts and response all stay personal and safe.

A latest weblog offers an instance of RAG accelerated by TensorRT-LLM for Home windows to get higher outcomes quick.

The Historical past of RAG

The roots of the method return at the least to the early Seventies. That’s when researchers in info retrieval prototyped what they known as question-answering methods, apps that use pure language processing (NLP) to entry textual content, initially in slim matters corresponding to baseball.

The ideas behind this type of textual content mining have remained pretty fixed through the years. However the machine studying engines driving them have grown considerably, rising their usefulness and recognition.

Within the mid-Nineteen Nineties, the Ask Jeeves service, now Ask.com, popularized query answering with its mascot of a well-dressed valet. IBM’s Watson turned a TV celeb in 2011 when it handily beat two human champions on the Jeopardy! recreation present.

At present, LLMs are taking question-answering methods to a complete new stage.

Insights From a London Lab

The seminal 2020 paper arrived as Lewis was pursuing a doctorate in NLP at College School London and dealing for Meta at a brand new London AI lab. The workforce was trying to find methods to pack extra data into an LLM’s parameters and utilizing a benchmark it developed to measure its progress.

Constructing on earlier strategies and impressed by a paper from Google researchers, the group “had this compelling imaginative and prescient of a educated system that had a retrieval index in the midst of it, so it may study and generate any textual content output you wished,” Lewis recalled.

When Lewis plugged into the work in progress a promising retrieval system from one other Meta workforce, the primary outcomes had been unexpectedly spectacular.

“I confirmed my supervisor and he mentioned, ‘Whoa, take the win. This type of factor doesn’t occur fairly often,’ as a result of these workflows might be exhausting to arrange appropriately the primary time,” he mentioned.

Lewis additionally credit main contributions from workforce members Ethan Perez and Douwe Kiela, then of New York College and Fb AI Analysis, respectively.

When full, the work, which ran on a cluster of NVIDIA GPUs, confirmed tips on how to make generative AI fashions extra authoritative and reliable. It’s since been cited by lots of of papers that amplified and prolonged the ideas in what continues to be an energetic space of analysis.

How Retrieval-Augmented Technology Works

At a excessive stage, right here’s how retrieval-augmented era works.

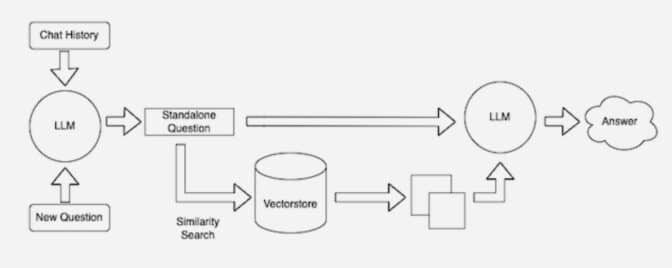

When customers ask an LLM a query, the AI mannequin sends the question to a different mannequin that converts it right into a numeric format so machines can learn it. The numeric model of the question is typically known as an embedding or a vector.

The embedding mannequin then compares these numeric values to vectors in a machine-readable index of an accessible data base. When it finds a match or a number of matches, it retrieves the associated information, converts it to human-readable phrases and passes it again to the LLM.

Lastly, the LLM combines the retrieved phrases and its personal response to the question right into a last reply it presents to the person, probably citing sources the embedding mannequin discovered.

Conserving Sources Present

Within the background, the embedding mannequin repeatedly creates and updates machine-readable indices, generally known as vector databases, for brand spanking new and up to date data bases as they change into accessible.

Many builders discover LangChain, an open-source library, might be notably helpful in chaining collectively LLMs, embedding fashions and data bases. NVIDIA makes use of LangChain in its reference structure for retrieval-augmented era.

The LangChain neighborhood offers its personal description of a RAG course of.

The way forward for generative AI lies in agentic AI — the place LLMs and data bases are dynamically orchestrated to create autonomous assistants. These AI-driven brokers can improve decision-making, adapt to complicated duties and ship authoritative, verifiable outcomes for customers.