Artificial intelligence (AI) is often hailed as the next frontier of innovation, transforming business operations and delivering unprecedented efficiencies. With capabilities doubling every 70 days, AI’s march forward has never been more relentless, or more exciting. Yet for many C-Suite executives, owners, and business technology managers, the real question isn’t whether to adopt AI but how to do so securely and responsibly.

We’re excited to announce our new partnership with Hatz.ai, a collaboration resulting in a Secure AI platform built for small to medium businesses and enterprises. Recently, our press releases on this partnership received national coverage from the Associated Press (AP News), San Francisco’s KRON 4, and Portland’s KOIN 6. This spotlight reflects the growing demand for solutions that balance AI innovation with robust data security.

What follows is a journey through the AI landscape, exploring the benefits, risks, and best practices of adopting Enterprise AI tools with Privacy and Security.

The AI Journey Begins: “AI Is Like Having Infinite Interns”

As Ethan Mollick, Associate Professor at Wharton’s Generative AI Lab, puts it:

“AI is like having infinite interns: fast, eager, tireless, but needing direction and boundaries.”

AI isn’t magic; it’s a tool. It delivers the best results only when you train it, guide it, and maintain control. For businesses that juggle rapid innovation alongside data governance, it’s important to remember that a lack of structure or oversight can be detrimental.

Doing Nothing Comes With Risk

When organizations delay or avoid AI adoption, they face serious pitfalls:

- Shadow AI Usage: Employees may use AI regardless of approval, creating unregulated data trails.

- Data Governance & IP Issues: Sensitive information may end up in external systems.

- User Access Control Weaknesses: Without centralized oversight, ensuring consistent security and usage policies is difficult.

- Unstandardized Methods: Different teams might adopt different AI tools, resulting in a fragmented approach.

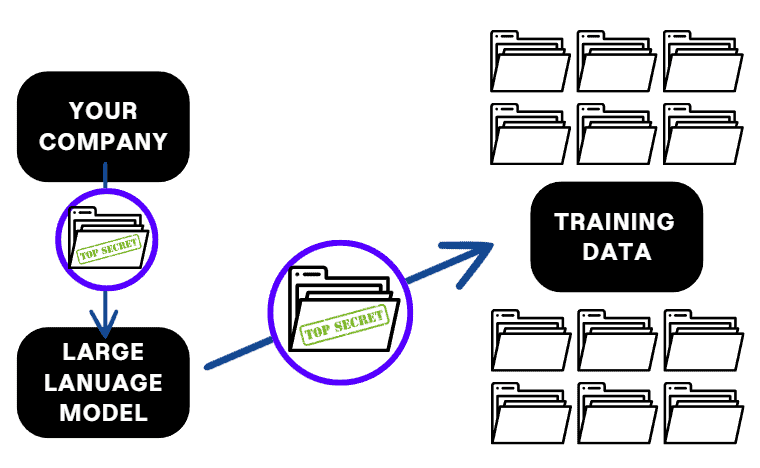

- Data Security & Privacy Concerns: Free platforms, like public ChatGPT or other LLMs, may train on your data.

- AI Hallucinations: Without training, employees risk misusing AI outputs, sometimes accepting incorrect information.

Remember: If the product is free, you are the product. Free AI platforms often rely on user data to refine their models. Using them for sensitive company data is no better than sharing documents through personal Gmail or Dropbox.

Considering AI’s exponential acceleration, “the best time to start learning AI was yesterday. The second best time is today.”

Public AI Platforms and Your Data

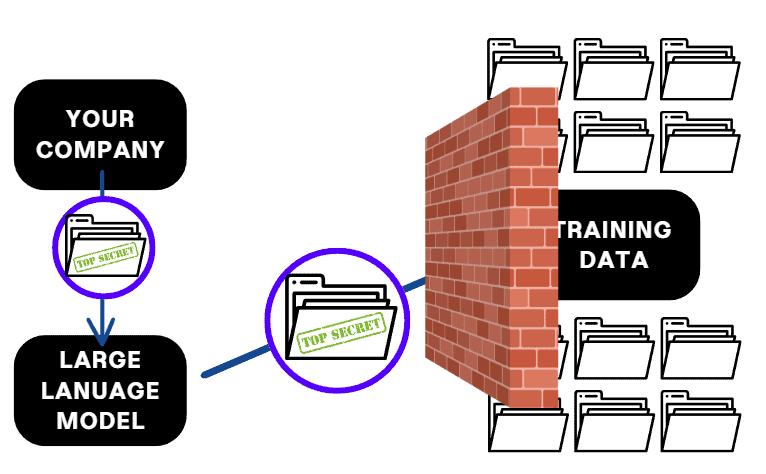

Heroic’s Secure AI Platform Powered by Hatz.ai and Your Data

New Challenges in Managed & Secure AI

Beyond data security alone, we’re now seeing two more hurdles that underscore why organizations need a flexible and manageable AI environment.

Rapidly Evolving AI Models

AI models seem to be leapfrogging each other monthly, if not weekly, on various benchmarks. Some excel at writing, others at software coding, and yet others at math. This constant shuffling of which model is “best” makes it unwise to commit to a single product or ecosystem.

Moreover, it’s unclear which AI platforms will still exist in two or three years. Betting your entire operation on a single AI vendor risks obsolescence if that vendor ceases to innovate or, worse, shuts down.

Where Heroic’s Secure AI Platform Excels

Powered by Hatz.ai, our Secure AI solution supports multiple leading LLMs, including models from OpenAI, Anthropic, Google, Meta, DeepSeek, and xAI. This setup lets you choose the best model for the task at hand (e.g., writing, coding, analytics) without vendor lock-in. It’s your insurance policy against the rapidly shifting AI landscape.

Comparison of LLMs Supported by Secure AI Powered by Hatz.ai

This is a snapshot of the popular LLMs that are available on the Hatz AI platform. Please note that the context window and max output token limits are approximate and not always accurate, depending on the type of request and other factors.

| Company | Model | Reasoning? (see our “Reasoning Models” article) | Vision | Input Tokens / Words*** | Output Tokens / Words | Knowledge Cutoff Date |
|---|---|---|---|---|---|---|
| OpenAI | GPT 3.5 Turbo | | | 16K / 12K | 4K / 3K | September 2021 |
| OpenAI | GPT 4 | | | 8K / 6K | 8K / 6K | December 2023 |
| OpenAI | GPT 4o | | Yes | 128K / 96K | 4K / 3K | December 2023 |
| OpenAI | o1 | Yes | | 200K / 150K | 100K / 75K | December 2023 |
| OpenAI | o3-mini | Yes | | 200K / 150K | 100K / 75K | December 2023 |
| OpenAI | GPT 4.5 Preview | | Yes | 128K / 96K | 16K / 12K | December 2023 |
| Anthropic | Claude 3 Sonnet | | | 200K / 150K | 4K / 3K | August 2023 |
| Anthropic | Claude 3 Haiku | | | 200K / 150K | 4K / 3K | August 2023 |
| Anthropic | Claude 3.5 Sonnet v2 | | Yes | 200K / 150K | 8K / 6K | April 2024 |
| Anthropic | Claude 3.7 Sonnet | Yes (hybrid) | Yes | 200K / 150K | 8K / 6K | October 2024 |
| xAI | Grok 2 | Yes | | 132K / 97K | 132K / 97K | July 2024 |
| xAI | Grok 2 Vision | Yes | Yes | 132K / 97K | 132K / 97K | July 2024 |
| Google | Gemini 1.5 Flash | | Yes | 1 Million / 750K | 8K / 6K | November 2023 |
| Google | Gemini 1.5 Pro | | Yes | 2 Million / 1.5 Million | 8K / 6K | November 2023 |
| Google | Gemini 2.0 Flash | | Yes | 1 Million / 750K | 8K / 6K | June 2024 |
| Google | Gemini 2.0 Flash Lite | | Yes | 1 Million / 750K | 8K / 6K | June 2024 |
| Mistral AI | Mistral Large | | | 32K / 24K | 4K / 3K | October 2023 |
| Mistral AI | Mistral 7B Instruct | | | | | |
| Mistral AI | Mixtral 8x7B Instruct | | | | | |
| DeepSeek | DeepSeek R1 | Yes | Yes | 32K / 24K | 32K / 24K | July 2024 |
| Meta | Llama 3.2 1B Instruct | | | | | |
| Meta | Llama 3.2 11B Instruct | | Yes | | | |
| Meta | Llama 3.2 70B Instruct | | | | | |
| Meta | Llama 3.2 8B Instruct | | | | | |

**For the most up-to-date supported model information, please visit here.
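The token and word figures in the table follow a ratio of roughly 0.75 words per token (for example, 16K tokens / 12K words). As a rough sketch, assuming that ratio holds for your own text, the helper below estimates whether a document fits within a model’s input limit. The function names and the `WORDS_PER_TOKEN` constant are illustrative assumptions, not part of the Hatz.ai platform, and real token counts vary by model, language, and content.

```python
import math

# Rule of thumb implied by the table: about 0.75 words per token.
# This is an approximation (an assumption), not an exact tokenizer.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int) -> int:
    """Estimate how many tokens a text of `word_count` words may use."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_input_window(word_count: int, input_token_limit: int) -> bool:
    """Return True if the estimated token count is within the input limit."""
    return estimate_tokens(word_count) <= input_token_limit


if __name__ == "__main__":
    # A 150,000-word document against a 200K-token and a 128K-token limit.
    print(fits_input_window(150_000, 200_000))
    print(fits_input_window(150_000, 128_000))
```

Because the limits in the table are themselves approximate, it is sensible to leave headroom rather than fill an input window to its estimated maximum.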

Use Cases by Company

| Company | Use Cases |
|---|---|
| OpenAI | Sentiment Analysis, Content Generation, Process Automation |
| Anthropic | RFP Responses, Content Generation, Coding |
| xAI | Reasoning |
| Google | Analyzing large documents, Summarizing reports |
| Mistral | Translating, Trained with non-English data |
| Meta | Customer Support, Small, fast tasks |
| DeepSeek | Reasoning |
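For teams that want to reference this mapping in their own tooling, it can be expressed as a simple lookup. This is only a sketch: the dictionary is transcribed from the use-case list above, the name `providers_for` is hypothetical, and it does not describe how the Secure AI platform itself selects models.

```python
# Use cases by company, transcribed from the list above.
# Illustrative only; not an official or exhaustive capability list.
USE_CASES_BY_COMPANY = {
    "OpenAI": ["Sentiment Analysis", "Content Generation", "Process Automation"],
    "Anthropic": ["RFP Responses", "Content Generation", "Coding"],
    "xAI": ["Reasoning"],
    "Google": ["Analyzing large documents", "Summarizing reports"],
    "Mistral": ["Translating", "Trained with non-English data"],
    "Meta": ["Customer Support", "Small, fast tasks"],
    "DeepSeek": ["Reasoning"],
}


def providers_for(use_case: str) -> list[str]:
    """Return the companies whose listed use cases include `use_case`."""
    wanted = use_case.strip().lower()
    return [
        company
        for company, cases in USE_CASES_BY_COMPANY.items()
        if wanted in (case.lower() for case in cases)
    ]


if __name__ == "__main__":
    print(providers_for("Reasoning"))
    print(providers_for("content generation"))
```

A lookup like this is a starting point for a shortlist; the right model for a given task is still worth confirming with a quick side-by-side trial.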

Lack of Robust Management for SMEs

Many publicly available LLMs are great for experimenting, but they often lack enterprise-grade management features. The exceptions are typically those fully baked into productivity suites, such as Microsoft’s Copilot in Microsoft 365 or Google’s Gemini in Google Workspace. Yet even these revolve around the vendor’s closed ecosystem, limiting flexibility.

- Management Capabilities to Consider: Easy user onboarding/offboarding, single sign-on (SSO), data-sharing policies, and security settings.

- Enterprise Plans: Some providers, like OpenAI’s ChatGPT Enterprise, only offer robust admin controls if you purchase a minimum of 150 seats or buy a private sandbox environment, an expensive commitment for many SMEs.

By contrast, Heroic’s Secure AI platform is designed with SMEs and enterprises in mind: no minimum user thresholds and no forced tie-ins to a single platform. You get flexibility and centralized management in one system.

Introducing Secure AI for Small Business: Powered by Hatz.ai

In this evolving landscape, our Secure AI solution offers:

- Multi-Model Support: Access to a variety of LLMs, so you can pick the right AI for the right job.

- Private & Secure: Data stays under strict organizational control and is never used for external model training.

- Compliance & Governance: Built with GDPR and HIPAA considerations, providing high confidence for regulated industries.

- Centralized Management: Manage user access, data policies, and security settings from one intuitive dashboard.

While we’re thrilled about our partnership with Hatz.ai, we weren’t part of the models’ original development; we simply bring these robust capabilities to businesses seeking reliable, secure AI.

Your AI Journey: Crawl, Walk, Run

For many organizations, AI adoption can still be intimidating, leading to technology skepticism, job replacement fears, or worries about skill obsolescence. Our Secure AI platform supports the proven Crawl-Walk-Run approach:

Crawl (General)

- AI Use Cases: Simple, immediate tasks (email summaries, meeting note generation) that benefit a wide audience.

- Minimal Learning Curve: Good for cross-departmental adoption with limited training required.

Walk (Templated)

- AI Use Cases: Complex tasks (advanced analytics, partial RFP responses) that require moderate customization.

- Reusability: Once templates are set, they can be replicated across multiple teams.

Run (Tailored)

- AI Use Cases: Specialized, high-impact projects unique to specific business units (custom sales forecasting, sophisticated compliance checks).

- High ROI: Requires expert-level implementation but can deliver significant competitive advantages.

Practical Experience: 10 Hours to Proficiency

Learning to use AI effectively is like learning to ride a bike: a little wobbly at first, but it becomes second nature with practice. Expect to invest 10 hours of focused hands-on time. Our Secure AI platform shortens this learning curve with a comprehensive training portal:

- Basic AI Terminology & Concepts

- Understanding Different LLMs

- Prompt Engineering

- Building Automations & Advanced Tools

Within this timeframe, employees from finance to operations can learn to use Enterprise AI tools with Privacy and Security responsibly.

Standard LLM vs. Secure AI: A Critical Difference

(Image Placeholder: A standard LLM diagram that trains on user-provided data vs. a Secure AI platform that doesn’t train on user data.)

Standard LLM

- Data Aggregation: Public models ingest data from numerous users, mixing private and public domains.

- Open Training: Your proprietary prompts can become part of future training sets.

- Security Gaps: Minimal or no control over how data is stored or used.

Secure AI Platform

- Controlled Environment: Data stays behind enterprise-grade security systems.

- No External Training: Your confidential data never enriches external models.

- Management Features: Centralized user management, detailed activity logs, and advanced security policies.

This distinction underscores why organizations should adopt a platform specifically designed for secure, multi-model AI usage.

Overcoming Common Challenges and Fears

By addressing these concerns head-on, you’ll pave the way for a successful AI rollout:

- Technology Skepticism: Pilot projects with quick wins demonstrate tangible ROI.

- Job Replacement Fears: AI handles repetitive tasks, enabling employees to focus on strategic, higher-level work.

- Ethical & Data Privacy Concerns: A robust governance model, data encryption, and role-based access instill confidence.

- Skill Obsolescence: With adequate training, employees quickly become AI-savvy, turning apprehension into enthusiasm.

Conclusion: Embrace the AI Journey

From rapidly evolving AI models to gaps in public LLM management, the AI landscape is as challenging as it is exciting. But with the Crawl-Walk-Run methodology and a Secure AI platform that doesn’t lock you into a single vendor, you can confidently chart a course for sustainable AI adoption.

As Ethan Mollick reminds us, AI can be an infinite number of interns, but only if you provide clear guidance and boundaries. Our partnership with Hatz.ai offers precisely that balance, ensuring your organization stays both innovative and compliant in an era where “the best time to start with AI was yesterday, and the second best time is today.”