For a robot, the real world is a lot to take in. Making sense of every data point in a scene can take a huge amount of computational effort and time. Using that information to then decide how best to help a human is an even thornier exercise.

Now, MIT roboticists have a way to cut through the data noise, to help robots focus on the features in a scene that are most relevant for assisting humans.

Their approach, which they aptly dub “Relevance,” enables a robot to use cues in a scene, such as audio and visual information, to determine a human’s objective and then quickly identify the objects that are most likely to be relevant in fulfilling that objective. The robot then carries out a set of maneuvers to safely offer the relevant objects or actions to the human.

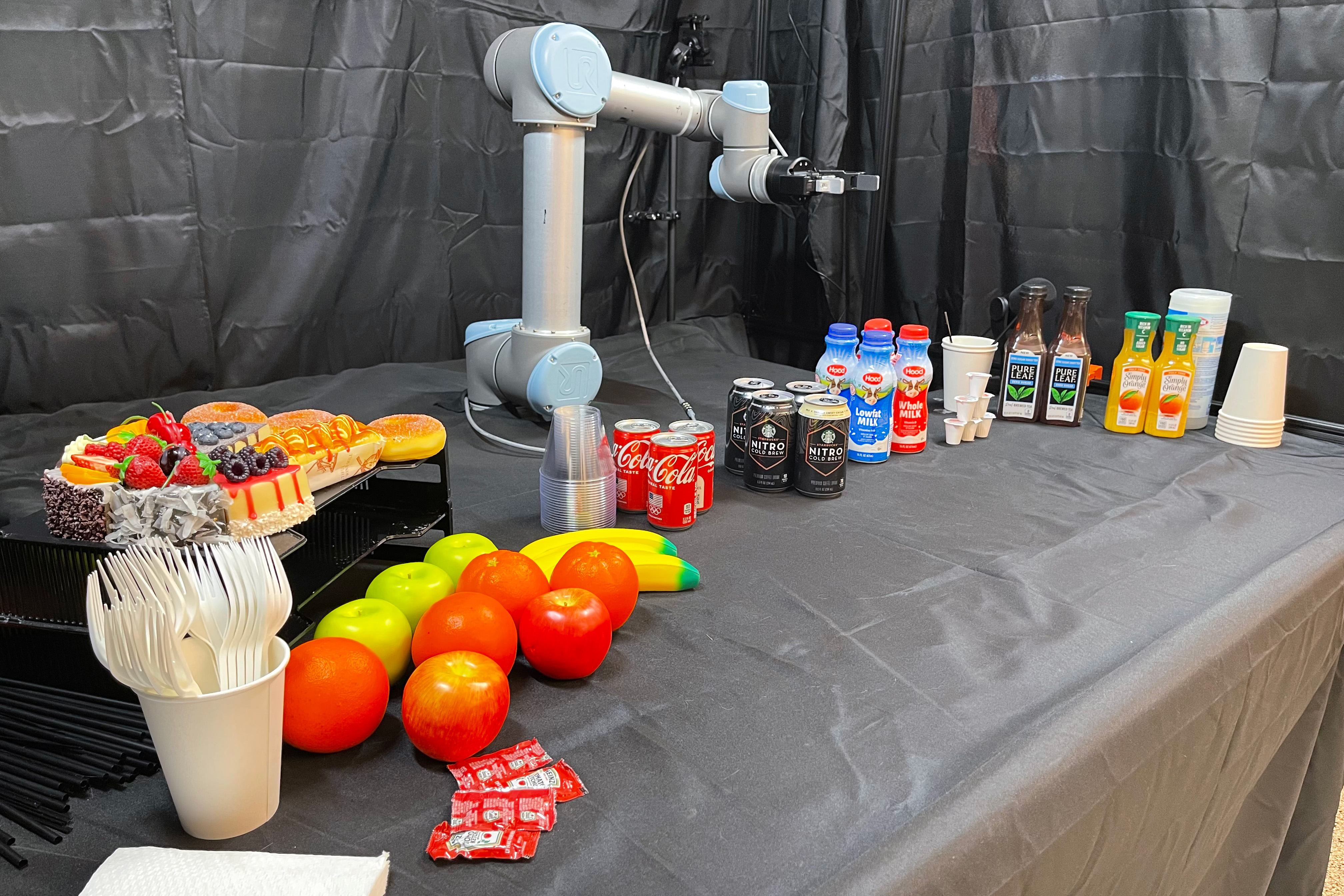

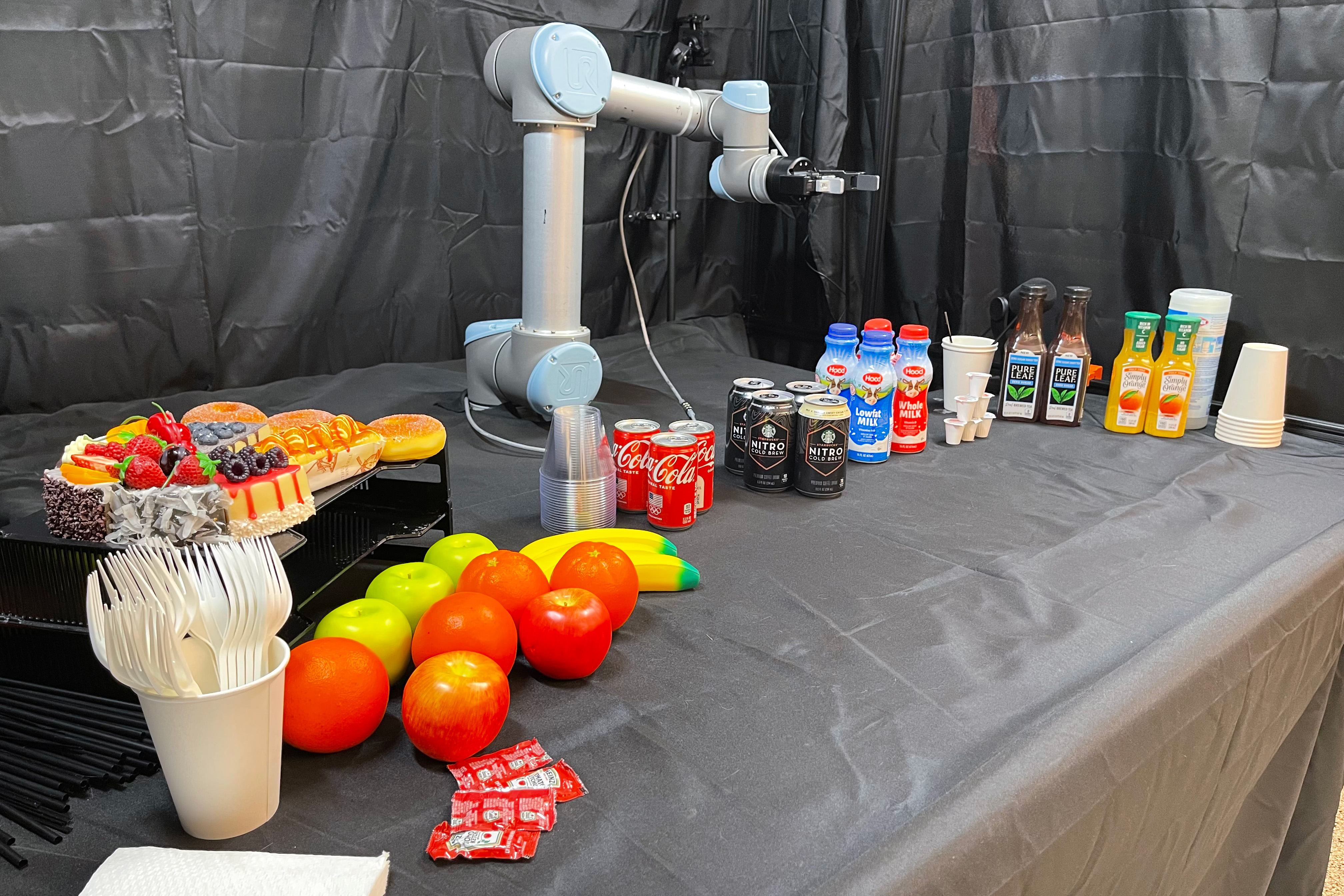

The researchers demonstrated the approach with an experiment that simulated a conference breakfast buffet. They set up a table with various fruits, drinks, snacks, and tableware, along with a robotic arm outfitted with a microphone and camera. Applying the new Relevance approach, they showed that the robot was able to correctly identify a human’s objective and appropriately assist them in different scenarios.

In one case, the robot took in visual cues of a human reaching for a can of prepared coffee, and quickly handed the person milk and a stir stick. In another scenario, the robot picked up on a conversation between two people talking about coffee, and offered them a can of coffee and creamer.

Overall, the robot was able to predict a human’s objective with 90 percent accuracy and to identify relevant objects with 96 percent accuracy. The method also improved the robot’s safety, reducing the number of collisions by more than 60 percent, compared to carrying out the same tasks without applying the new method.

“This approach of enabling relevance could make it much easier for a robot to interact with humans,” says Kamal Youcef-Toumi, professor of mechanical engineering at MIT. “A robot wouldn’t have to ask a human so many questions about what they need. It would just actively take information from the scene to figure out how to help.”

Youcef-Toumi’s group is exploring how robots programmed with Relevance can help in smart manufacturing and warehouse settings, where they envision robots working alongside and intuitively assisting humans.

Youcef-Toumi, along with graduate students Xiaotong Zhang and Dingcheng Huang, will present their new method at the IEEE International Conference on Robotics and Automation (ICRA) in May. The work builds on another paper presented at ICRA the previous year.

Finding focus

The team’s approach is inspired by our own ability to gauge what’s relevant in daily life. Humans can filter out distractions and focus on what’s important, thanks to a region of the brain known as the Reticular Activating System (RAS). The RAS is a bundle of neurons in the brainstem that acts subconsciously to prune away unnecessary stimuli, so that a person can consciously perceive the relevant stimuli. The RAS helps to prevent sensory overload, keeping us, for example, from fixating on every single item on a kitchen counter, and instead helping us to focus on pouring a cup of coffee.

“The amazing thing is, these groups of neurons filter everything that is not important, and then it has the brain focus on what is relevant at the time,” Youcef-Toumi explains. “That’s basically what our proposition is.”

He and his team developed a robotic system that broadly mimics the RAS’s ability to selectively process and filter information. The approach consists of four main phases. The first is a watch-and-learn “perception” stage, during which a robot takes in audio and visual cues, for instance from a microphone and camera, that are continuously fed into an AI “toolkit.” This toolkit can include a large language model (LLM) that processes audio conversations to identify keywords and phrases, and various algorithms that detect and classify objects, humans, physical actions, and task objectives. The AI toolkit is designed to run continuously in the background, similarly to the subconscious filtering that the brain’s RAS performs.

The second stage is a “trigger check” phase, a periodic check that the system performs to assess whether anything important is happening, such as whether a human is present. If a human has stepped into the environment, the system’s third phase will kick in. This phase is the heart of the team’s system, which acts to determine the features in the environment that are most likely relevant to assist the human.

To establish relevance, the researchers developed an algorithm that takes in real-time predictions made by the AI toolkit. For instance, the toolkit’s LLM may pick up the keyword “coffee,” and an action-classifying algorithm may label a person reaching for a cup as having the objective of “making coffee.” The team’s Relevance method would factor in this information to first determine the “class” of objects that have the highest probability of being relevant to the objective of “making coffee.” This might automatically filter out classes such as “fruits” and “snacks,” in favor of “cups” and “creamers.” The algorithm would then further filter within the relevant classes to determine the most relevant “elements.” For instance, based on visual cues of the environment, the system may label a cup closest to a person as more relevant, and more helpful, than a cup that is farther away.
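The two-level filter described above (object classes first, then individual objects within the surviving classes) can be illustrated with a minimal Python sketch. This is not the researchers’ implementation: the score table `CLASS_RELEVANCE`, the 0.5 threshold, the `SceneObject` type, and the use of plain distance to rank objects are all assumptions made for illustration. In the actual system, the predictions would come from the AI toolkit.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class SceneObject:
    name: str
    obj_class: str                  # e.g. "cups", "fruits"
    position: tuple[float, float]   # hypothetical table coordinates, in meters


# Hypothetical relevance scores per objective and object class.
# A real system would derive these from model predictions, not a fixed table.
CLASS_RELEVANCE = {
    "making coffee": {"cups": 0.9, "creamers": 0.8, "fruits": 0.1, "snacks": 0.1},
}


def relevant_objects(objective, objects, human_position,
                     class_threshold=0.5, per_class=1):
    """Keep high-scoring classes, then pick the closest object(s) in each."""
    scores = CLASS_RELEVANCE.get(objective, {})
    # Level 1: drop whole classes that score below the threshold.
    kept = [c for c, s in scores.items() if s >= class_threshold]
    kept.sort(key=lambda c: scores[c], reverse=True)

    selected = []
    for cls in kept:
        # Level 2: within a kept class, prefer objects nearest the human.
        members = [o for o in objects if o.obj_class == cls]
        members.sort(key=lambda o: dist(o.position, human_position))
        selected.extend(members[:per_class])
    return selected


scene = [
    SceneObject("cup_near", "cups", (0.2, 0.1)),
    SceneObject("cup_far", "cups", (1.5, 0.9)),
    SceneObject("creamer", "creamers", (0.4, 0.3)),
    SceneObject("apple", "fruits", (0.1, 0.1)),
]
picked = relevant_objects("making coffee", scene, human_position=(0.0, 0.0))
print([o.name for o in picked])  # ['cup_near', 'creamer']
```

In this toy scene the apple is discarded at the class level even though it sits closest to the person, and the nearer of the two cups wins at the object level.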

In the fourth and final phase, the robot would then take the identified relevant objects and plan a path to physically access and offer the objects to the human.
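Taken together, the four phases form a loop that can be sketched as follows. This is only a schematic under stated assumptions: every function name, the dictionary-based `frame` input, the `relevance` score table, and the string “plan” are hypothetical stand-ins. In particular, the real fourth phase involves motion planning for a robotic arm, which is not modeled here.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Perception:
    human_present: bool = False
    objective: Optional[str] = None
    objects: list = field(default_factory=list)


def perceive(frame: dict) -> Perception:
    """Phase 1: stand-in for the always-on AI toolkit (audio and vision)."""
    return Perception(
        human_present=frame.get("human", False),
        objective=frame.get("objective"),
        objects=frame.get("objects", []),
    )


def trigger_check(p: Perception) -> bool:
    """Phase 2: periodic check for something important, here a human."""
    return p.human_present


def select_relevant(p: Perception, relevance: dict, threshold: float = 0.5) -> list:
    """Phase 3: keep objects whose (hypothetical) score passes the threshold."""
    return [o for o in p.objects
            if relevance.get((p.objective, o), 0.0) >= threshold]


def plan_handover(objects: list) -> list:
    """Phase 4: placeholder for path planning and the physical handover."""
    return [f"offer {o}" for o in objects]


def step(frame: dict, relevance: dict) -> list:
    p = perceive(frame)
    if not trigger_check(p):
        return []  # nothing triggered: keep perceiving, take no action
    return plan_handover(select_relevant(p, relevance))


relevance = {("making coffee", "cup"): 0.9,
             ("making coffee", "creamer"): 0.8,
             ("making coffee", "apple"): 0.1}
idle = step({"objects": ["cup", "creamer", "apple"]}, relevance)
active = step({"human": True, "objective": "making coffee",
               "objects": ["cup", "creamer", "apple"]}, relevance)
print(idle, active)
```

The sketch keeps the relevance step behind the trigger check, so that the more selective reasoning runs only once a human is detected.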

Helper mode

The researchers tested the new system in experiments that simulate a conference breakfast buffet. They chose this scenario based on the publicly available Breakfast Actions Dataset, which comprises videos and images of typical activities that people perform during breakfast time, such as preparing coffee, cooking pancakes, making cereal, and frying eggs. Actions in each video and image are labeled, along with the overall objective (frying eggs, versus making coffee).

Using this dataset, the team tested various algorithms in their AI toolkit, such that, when receiving actions of a person in a new scene, the algorithms could accurately label and classify the human tasks and objectives, and the associated relevant objects.

In their experiments, they set up a robotic arm and gripper and instructed the system to assist humans as they approached a table filled with various drinks, snacks, and tableware. They found that when no humans were present, the robot’s AI toolkit operated continuously in the background, labeling and classifying objects on the table.

When, during a trigger check, the robot detected a human, it snapped to attention, turning on its Relevance phase and quickly identifying objects in the scene that were most likely to be relevant, based on the human’s objective, which was determined by the AI toolkit.

“Relevance can guide the robot to generate seamless, intelligent, safe, and efficient assistance in a highly dynamic environment,” says co-author Zhang.

Going forward, the team hopes to apply the system to scenarios that resemble workplace and warehouse environments, as well as to other tasks and objectives typically performed in household settings.

“I would want to test this system in my home to see, for instance, if I’m reading the paper, maybe it can bring me coffee. If I’m doing laundry, it can bring me a laundry pod. If I’m doing repair, it can bring me a screwdriver,” Zhang says. “Our vision is to enable human-robot interactions that can be much more natural and fluent.”

This research was made possible by the support and partnership of King Abdulaziz City for Science and Technology (KACST) through the Center for Complex Engineering Systems at MIT and KACST.
