Palko writes:

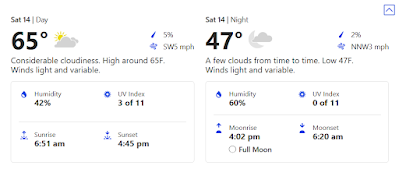

Last Saturday, I [Palko] checked Google and saw that the forecast for a week from that day was a 75% chance of rain. That would have been great news (it’s been dry in Southern California this winter), perhaps too good to be true. I checked a couple of competing sites and saw no indication of rain in the next seven days anywhere in the area. A few hours later I checked back in, and Google was now in line with all the other forecasts, with 5% or less predicted.

As of Thursday, Google is down to 0% for Saturday while the Weather Channel has 18%.

Wow. I’ve noticed sometimes that different online sources give very different weather forecasts, even for the next day. But I’ve never looked into this systematically. I’m reminded a bit of Rajiv Sethi’s evaluations of election forecasts and our earlier post, What does it mean when they say there’s a 30% chance of rain?

Palko continues:

We’ve talked a lot about what it means for a continuously updated prediction, such as election outcomes, navigation app travel time estimates, and weather forecasts, to be accurate. It’s a complicated question without an objectively true answer. There are many valid metrics, none of which gives us the definitive answer.
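To make "many valid metrics" concrete, here is a minimal sketch of one common choice, the Brier score: the mean squared difference between forecast probabilities and 0/1 outcomes (lower is better). Neither Palko nor the post names this metric; it is only an illustration, and the forecasts and outcomes below are hypothetical.

```python
def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if len(probs) != len(outcomes):
        raise ValueError("probs and outcomes must have the same length")
    if not probs:
        raise ValueError("need at least one forecast")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


# Hypothetical example: three rain forecasts, and it stayed dry each time.
forecasts = [0.75, 0.05, 0.0]
outcomes = [0, 0, 0]
score = brier_score(forecasts, outcomes)
```

Other scoring rules, such as the log score, can rank the same forecasters differently, which is one reason no single number settles the question.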

Obviously, accuracy is the main objective, but there are other indicators of model quality we can and should keep an eye on. Barring big new data (a major shift in the polls, a recently reported accident on your route), we don’t expect to see big swings between updates, and if there are a number of competing models largely running off the same data, we expect a certain amount of consistency. If we have a prediction that’s inaccurate, displays sudden swings, and makes forecasts wildly divergent from its competitors, that raises some questions.
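The two secondary indicators described here, swings between updates and divergence from competitors, can be sketched roughly as follows. The function names are hypothetical, and the competitor values are assumed for illustration: the post says only that the other forecasts were at "5% or less." The second Google value is likewise taken as the 5% upper bound.

```python
from statistics import median


def max_swing(updates):
    """Largest absolute change between consecutive updates of one forecast."""
    return max((abs(b - a) for a, b in zip(updates, updates[1:])), default=0.0)


def divergence_from_consensus(forecast, competitors):
    """Absolute gap between one forecast and the median of competing forecasts."""
    return abs(forecast - median(competitors))


# Google's forecast for the same day: 75%, then (assumed) 5% a few hours later.
google_updates = [0.75, 0.05]
# Assumed competitor forecasts, all at or below 5%.
competitors = [0.05, 0.03, 0.0]

swing = max_swing(google_updates)
gap = divergence_from_consensus(google_updates[0], competitors)
```

A large value on either measure is not proof of a bad model, since real news can justify a jump, but a forecast that scores high on both with no new data behind it is the kind that raises questions.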