Scientific publishing is confronting an increasingly provocative issue: what do you do about AI in peer review?

Ecologist Timothée Poisot recently received a review that was clearly generated by ChatGPT. The document had the following telltale string of words attached: “Here is a revised version of your review with improved clarity and structure.”

Poisot was incensed. “I submit a manuscript for review in the hope of getting comments from my peers,” he fumed in a blog post. “If this assumption is not met, the entire social contract of peer review is gone.”

Poisot’s experience is not an isolated incident. A recent study published in Nature found that up to 17% of reviews for AI conference papers in 2023-24 showed signs of substantial modification by language models.

And in a separate Nature survey, nearly one in five researchers admitted to using AI to speed up and ease the peer review process.

We’ve also seen a few absurd cases of what happens when AI-generated content slips through the peer review process, which is designed to uphold the quality of research.

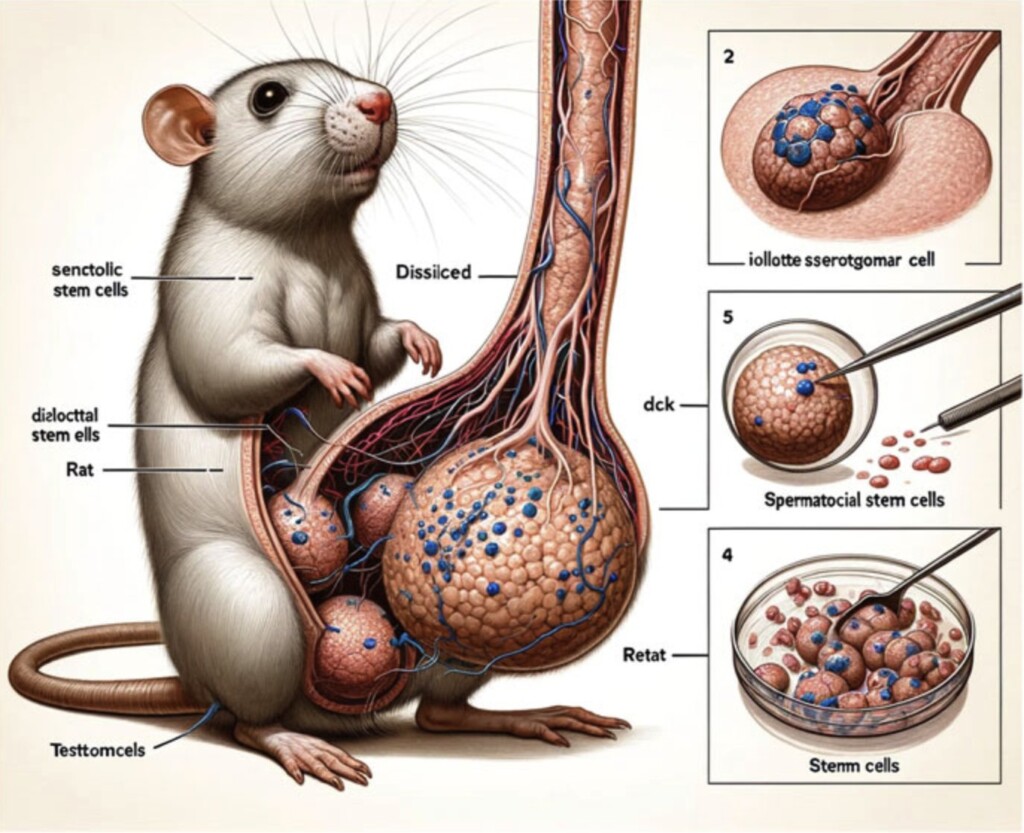

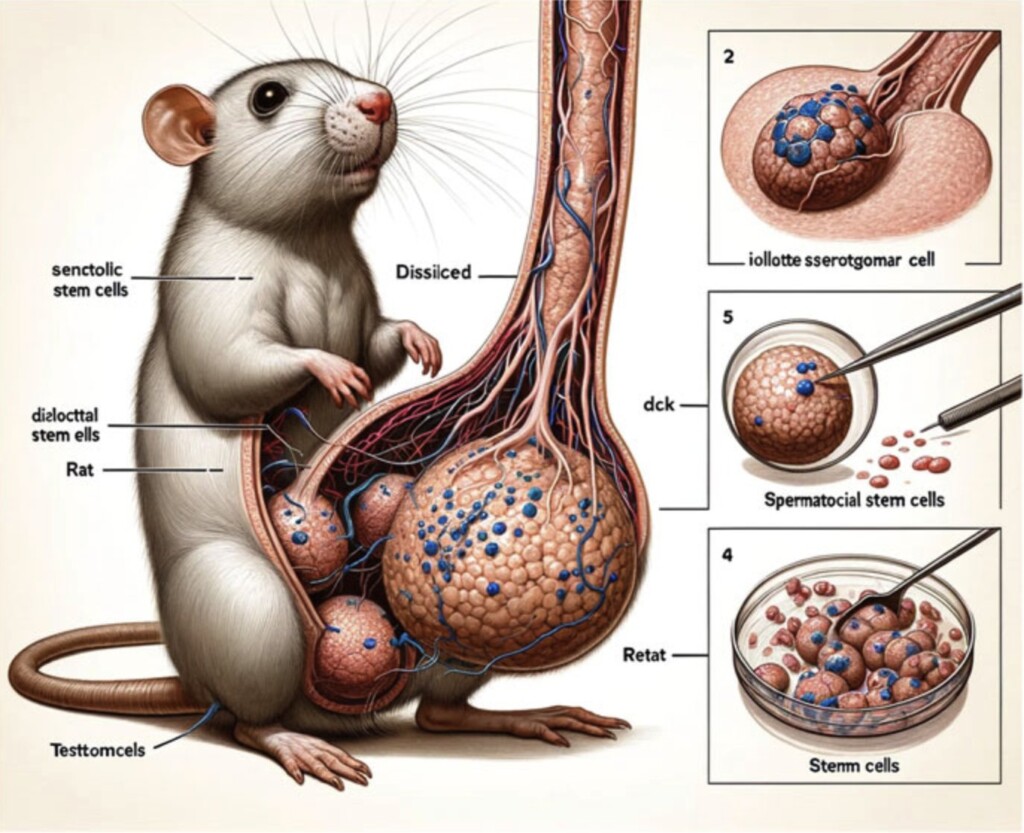

In 2024, a paper published in a Frontiers journal, which explored some highly complex cell signaling pathways, was found to contain bizarre, nonsensical diagrams generated by the AI art tool Midjourney.

One image depicted a deformed rat, while others were just random swirls and squiggles, filled with gibberish text.

Commenters on Twitter were aghast that such obviously flawed figures made it through peer review. “Erm, how did Figure 1 get past a peer reviewer?!” one asked.

In essence, there are two risks: a) peer reviewers using AI to review content, and b) AI-generated content slipping through the entire peer review process.

Publishers are responding to these issues. Elsevier has banned generative AI in peer review outright. Wiley and Springer Nature allow “limited use” with disclosure. A few, like the American Institute of Physics, are gingerly piloting AI tools to supplement – but not supplant – human feedback.

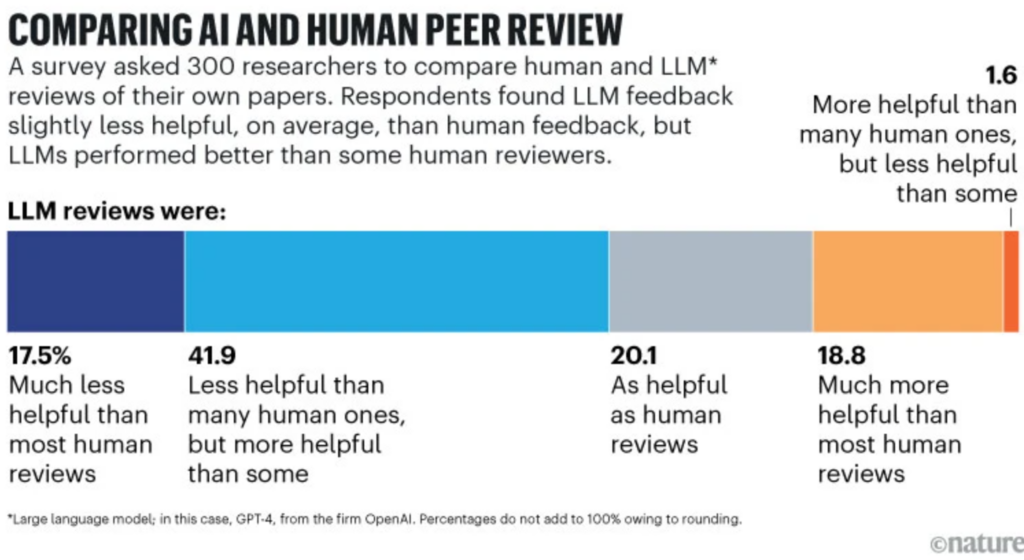

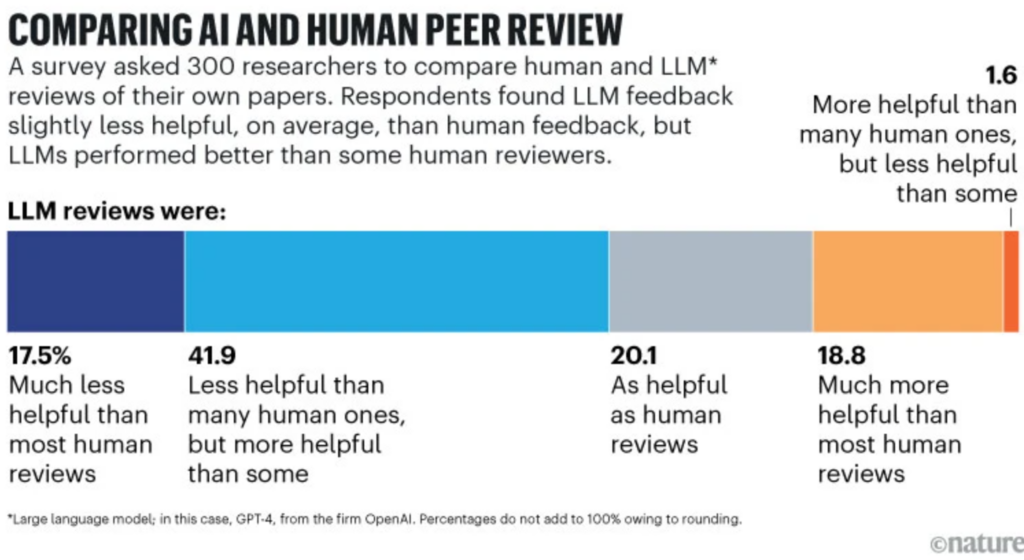

However, generative AI’s allure is strong, and some see benefits if it is applied judiciously. A Stanford study found that 40% of scientists felt ChatGPT reviews of their work could be as helpful as human ones, and a further 20% felt they could be more helpful.

Academia has revolved around human input for centuries, though, so the resistance is strong. “Not combating automated reviews means we have given up,” Poisot wrote.

The whole point of peer review, many argue, is considered feedback from fellow experts – not an algorithmic rubber stamp.
