NVIDIA mentioned it has achieved a document giant language mannequin (LLM) inference velocity, asserting that an NVIDIA DGX B200 node with eight NVIDIA Blackwell GPUs achieved greater than 1,000 tokens per second (TPS) per consumer on the 400-billion-parameter Llama 4 Maverick mannequin.

NVIDIA mentioned the mannequin is the biggest and strongest within the Llama 4 assortment and that the velocity was independently measured by the AI benchmarking service Synthetic Evaluation.

NVIDIA added that Blackwell reaches 72,000 TPS/server at their highest throughput configuration.

The corporate mentioned it made software program optimizations utilizing TensorRT-LLM and educated a speculative decoding draft mannequin utilizing EAGLE-3 strategies. Combining these approaches, NVIDIA has achieved a 4x speed-up relative to one of the best prior Blackwell baseline, NVIDIA mentioned.

“The optimizations described under considerably improve efficiency whereas preserving response accuracy,” NVIDIA mentioned in a weblog posted yesterday. “We leveraged FP8 knowledge varieties for GEMMs, Combination of Specialists (MoE), and Consideration operations to cut back the mannequin measurement and make use of the excessive FP8 throughput doable with Blackwell Tensor Core expertise. Accuracy when utilizing the FP8 knowledge format matches that of Synthetic Evaluation BF16 throughout many metrics….”Most generative AI software contexts require a stability of throughput and latency, guaranteeing that many purchasers can concurrently take pleasure in a “ok” expertise. Nonetheless, for essential functions that should make essential choices at velocity, minimizing latency for a single shopper turns into paramount. Because the TPS/consumer document exhibits, Blackwell {hardware} is the only option for any activity—whether or not you should maximize throughput, stability throughput and latency, or decrease latency for a single consumer (the main target of this submit).

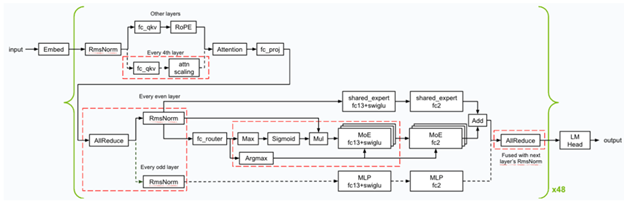

Beneath is an summary of the kernel optimizations and fusions (denoted in red-dashed squares) NVIDIA utilized through the inference. NVIDIA applied a number of low-latency GEMM kernels, and utilized numerous kernel fusions (like FC13 + SwiGLU, FC_QKV + attn_scaling and AllReduce + RMSnorm) to ensure Blackwell excels on the minimal latency state of affairs.

Overview of the kernel optimizations & fusions used for Llama 4 Maverick

NVIDIA optimized the CUDA kernels for GEMMs, MoE, and Consideration operations to attain one of the best efficiency on the Blackwell GPUs.

- Utilized spatial partitioning (also referred to as warp specialization) and designed the GEMM kernels to load knowledge from reminiscence in an environment friendly method to maximise utilization of the large reminiscence bandwidth that the NVIDIA DGX system presents—64TB/s HBM3e bandwidth in complete.

- Shuffled the GEMM weight in a swizzled format to permit higher format when loading the computation consequence from Tensor Reminiscence after the matrix multiplication computations utilizing Blackwell’s fifth-generation Tensor Cores.

- Optimized the efficiency of the eye kernels by dividing the computations alongside the sequence size dimension of the Ok and V tensors, permitting computations to run in parallel throughout a number of CUDA thread blocks. As well as, NVIDIA utilized distributed shared reminiscence to effectively scale back outcomes throughout the thread blocks in the identical thread block cluster with out the necessity to entry the worldwide reminiscence.

The rest of the weblog may be discovered right here.