Ray has emerged as a powerful framework for distributed computing in AI and ML workloads, enabling researchers and practitioners to scale their applications from laptops to clusters with minimal code changes. This guide provides an in-depth exploration of Ray’s architecture, capabilities, and applications in modern machine learning workflows, complete with a practical project implementation.

Learning Objectives

- Understand Ray’s architecture and its role in distributed computing for AI/ML.

- Leverage Ray’s ecosystem (Train, Tune, Serve, Data) for end-to-end ML workflows.

- Compare Ray with other distributed computing frameworks.

- Design distributed training pipelines for large language models.

- Optimize resource allocation and debug distributed applications.

This article was published as a part of the Data Science Blogathon.

Introduction to Ray and Distributed Computing

Ray is an open-source unified framework for scaling AI and Python applications, providing a simple, universal API for building distributed applications that can scale from a laptop to a cluster. Developed originally at UC Berkeley’s RISELab and now maintained by Anyscale, Ray has gained significant traction in the AI community, becoming the backbone for training and deploying some of the most advanced AI models today.

The growing importance of distributed computing in AI stems from several factors:

- Growing model sizes: Modern AI models, especially large language models (LLMs), have grown exponentially in size, with billions or even trillions of parameters.

- Expanding datasets: Training data continues to grow in volume, often exceeding what can be processed on a single machine.

- Computational demands: Complex algorithms and training procedures require more computational resources than individual machines can provide.

- Deployment challenges: Serving models at scale requires distributed infrastructure to handle varying workloads efficiently.

Traditional distributed computing frameworks often require significant rewrites of existing code and present a steep learning curve. Ray differentiates itself by offering a simple, intuitive API that makes transitioning from single-machine to multi-machine computation straightforward, often requiring only a few decorator changes to existing Python code.

Challenge of Scaling Python Applications

Python has become the lingua franca of data science and machine learning, but it wasn’t designed with distributed computing in mind. When practitioners need to scale their Python applications, they traditionally face several challenges:

- Low-level distribution concerns: Managing worker processes, load balancing, and fault tolerance.

- Data movement: Efficiently transferring data between machines.

- Resource management: Allocating and tracking CPU, GPU, and memory resources across a cluster.

- Code complexity: Rewriting algorithms to work in a distributed fashion.

Ray addresses these challenges by providing a unified framework that abstracts away much of the complexity while still allowing fine-grained control when needed.

Ray Framework

The Ray framework’s architecture is structured into three main components:

- Ray AI Libraries: This collection of Python-based, domain-specific libraries provides machine learning engineers, data scientists, and researchers with a scalable toolkit tailored for various ML applications.

- Ray Core: Serving as the foundation, Ray Core is a general-purpose distributed computing library that empowers Python developers to parallelize and scale applications, thereby accelerating machine learning workloads.

- Ray Clusters: Comprising multiple worker nodes connected to a central head node, Ray Clusters can be configured with a fixed size or set to dynamically adjust resources based on the demands of the running applications.

This modular design enables users to efficiently build and manage distributed applications without requiring in-depth expertise in distributed systems.

Getting Started with Ray

Before diving into advanced applications, it’s important to set up your Ray environment and understand the basics of getting started.

Ray can be installed using pip. To install the latest stable version, run:

# For machine learning applications
pip install -U "ray[data,train,tune,serve]"

## For reinforcement learning support, install RLlib instead.
## pip install -U "ray[rllib]"

# For general Python applications
pip install -U "ray[default]"

## If you don't want Ray Dashboard or Cluster Launcher, install Ray with minimal dependencies instead.
## pip install -U "ray"

Ray’s Programming Model: Tasks and Actors

Ray’s programming model revolves around two main abstractions:

- Tasks: Functions that execute remotely and asynchronously. Tasks are stateless computations that can be scheduled on any worker in the cluster.

- Actors: Classes that maintain state and execute methods remotely. Actors encapsulate state and provide an object-oriented approach to distributed computing.

These abstractions allow developers to express different types of parallelism naturally:

import ray

# Initialize Ray
ray.init()

# Define a remote task
@ray.remote
def process_data(data_chunk):
    # Placeholder processing step: sum the values in the chunk
    processed_result = sum(data_chunk)
    return processed_result

# Define an actor class
@ray.remote
class Counter:
    def __init__(self):
        self.count = 0

    def increment(self):
        self.count += 1
        return self.count

    def get_count(self):
        return self.count

# Execute tasks in parallel (small placeholder chunks stand in for real data)
data_chunks = [[1, 2], [3, 4], [5, 6], [7, 8]]
result_refs = [process_data.remote(chunk) for chunk in data_chunks]
results = ray.get(result_refs)  # Wait for all tasks to complete

# Create an actor instance
counter = Counter.remote()
counter.increment.remote()  # Execute method on the actor
count = ray.get(counter.get_count.remote())  # Get the actor's state

Ray’s programming model makes it easy to transform sequential Python code into distributed applications with minimal changes. Tasks are ideal for stateless, embarrassingly parallel workloads, while actors are well suited to maintaining state or implementing services.
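For readers new to the submit-now, fetch-later pattern, the standard library’s `concurrent.futures` offers a rough single-machine analogy: submitting work returns a future immediately, and the result is collected afterwards. The sketch below is not Ray code and uses only the standard library; `square` and `RunningTotal` are hypothetical stand-ins for a task and an actor.

```python
from concurrent.futures import ThreadPoolExecutor
import threading


def square(x):
    """Stateless work, comparable to a Ray task."""
    return x * x


class RunningTotal:
    """Stateful object, loosely comparable to a Ray actor."""

    def __init__(self):
        self._total = 0
        # An actor runs one method call at a time by default; a lock mimics that here
        self._lock = threading.Lock()

    def add(self, value):
        with self._lock:
            self._total += value
            return self._total


with ThreadPoolExecutor(max_workers=4) as pool:
    futures = [pool.submit(square, x) for x in range(4)]  # similar to square.remote(x)
    squares = [f.result() for f in futures]               # similar to ray.get(refs)

    total = RunningTotal()
    add_futures = [pool.submit(total.add, s) for s in squares]
    # Running totals only grow, so the largest returned value is the final state
    final_total = max(f.result() for f in add_futures)

print(squares, final_total)
```

The analogy stops at one machine: Ray extends the same pattern across processes and nodes, with the object store carrying results between them.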

Ray Cluster Architecture

A Ray cluster consists of several key components:

- Head Node: The central coordination point for the cluster, hosting the Global Control Store (GCS), which maintains cluster metadata.

- Worker Nodes: Machines that run worker processes, which execute tasks and host actors. By default, Ray starts one worker process per CPU core.

- Driver Process: The process running the user’s program, responsible for submitting tasks to the cluster.

- Object Store: A distributed, shared-memory object store for efficient data sharing between tasks and actors.

- Scheduler: Responsible for assigning tasks to workers based on resource availability and constraints.

- Resource Management: Ray’s system for allocating and tracking CPU, GPU, and custom resources across the cluster.
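To make the scheduler and resource-management roles above more concrete, here is a deliberately simplified, first-fit placement sketch in plain Python. It is an illustration of the idea only, not Ray’s actual scheduling algorithm; `Node` and `place` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    available: dict = field(default_factory=dict)  # e.g. {"CPU": 8, "GPU": 1}


def place(task_demand, nodes):
    """Return the first node that can satisfy the demand and reserve its resources."""
    for node in nodes:
        if all(node.available.get(res, 0) >= amount for res, amount in task_demand.items()):
            for res, amount in task_demand.items():
                node.available[res] -= amount
            return node.name
    return None  # no capacity anywhere; a real scheduler would queue the task


nodes = [Node("head", {"CPU": 4}), Node("worker-1", {"CPU": 8, "GPU": 1})]
placements = [
    place({"CPU": 2}, nodes),            # fits on the head node
    place({"CPU": 1, "GPU": 1}, nodes),  # needs a GPU, so it lands on worker-1
    place({"GPU": 1}, nodes),            # the only GPU is already reserved
]
print(placements)
```

The same resource vocabulary appears in Ray itself, where tasks and actors declare what they need (for example through `num_cpus` and `num_gpus`) and are placed only where that demand can be met.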

Setting up a Ray cluster can be done in several ways:

- Locally on a single machine

- On a private cluster using Ray’s cluster launcher

- On cloud providers like AWS, GCP, or Azure

- Using managed services like Anyscale

# Starting Ray on a single machine (head node)
ray start --head --port=6379

# Joining a worker node to the cluster
ray start --address=<head-node-ip>:6379

Ray Object Store and Memory Management

Ray includes a distributed object store that enables efficient sharing of objects between tasks and actors. Objects in the store are immutable and can be accessed by any worker in the cluster.

import ray
import numpy as np

ray.init()

# Store an object in the object store
data = np.random.rand(1000, 1000)
data_ref = ray.put(data)  # Returns a reference to the object

# Pass the reference to a remote task
@ray.remote
def process_matrix(matrix):
    # Ray resolves a top-level ObjectRef argument before the task runs,
    # so `matrix` is already the NumPy array here
    return np.sum(matrix)

result_ref = process_matrix.remote(data_ref)
result = ray.get(result_ref)

The object store optimizes data transfer by:

- Avoiding unnecessary data copying: Objects are shared by reference when possible.

- Spilling to disk: Automatically moving objects to disk when memory is limited.

- Distributed references: Tracking object references across the cluster.

Ray for AI and ML Workloads

Ray provides a comprehensive ecosystem of libraries specifically designed for different aspects of AI and ML workflows:

Ray Train for Distributed Model Training using PyTorch

Ray Train simplifies distributed deep learning with a unified API across different frameworks.

For reference, the final code will look something like the following:

import os
import tempfile

import torch
from torch.nn import CrossEntropyLoss
from torch.optim import Adam
from torch.utils.data import DataLoader
from torchvision.models import resnet18
from torchvision.datasets import FashionMNIST
from torchvision.transforms import ToTensor, Normalize, Compose

import ray.train.torch

def train_func():
    # Model, Loss, Optimizer
    model = resnet18(num_classes=10)
    model.conv1 = torch.nn.Conv2d(
        1, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False
    )
    # [1] Prepare model.
    model = ray.train.torch.prepare_model(model)
    # model.to("cuda")  # This is done by `prepare_model`
    criterion = CrossEntropyLoss()
    optimizer = Adam(model.parameters(), lr=0.001)

    # Data
    transform = Compose([ToTensor(), Normalize((0.28604,), (0.32025,))])
    data_dir = os.path.join(tempfile.gettempdir(), "data")
    train_data = FashionMNIST(root=data_dir, train=True, download=True, transform=transform)
    train_loader = DataLoader(train_data, batch_size=128, shuffle=True)
    # [2] Prepare dataloader.
    train_loader = ray.train.torch.prepare_data_loader(train_loader)

    # Training
    for epoch in range(10):
        if ray.train.get_context().get_world_size() > 1:
            train_loader.sampler.set_epoch(epoch)

        for images, labels in train_loader:
            # This is done by `prepare_data_loader`!
            # images, labels = images.to("cuda"), labels.to("cuda")
            outputs = model(images)
            loss = criterion(outputs, labels)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

        # [3] Report metrics and checkpoint.
        metrics = {"loss": loss.item(), "epoch": epoch}
        with tempfile.TemporaryDirectory() as temp_checkpoint_dir:
            torch.save(
                model.module.state_dict(),
                os.path.join(temp_checkpoint_dir, "model.pt")
            )
            ray.train.report(
                metrics,
                checkpoint=ray.train.Checkpoint.from_directory(temp_checkpoint_dir),
            )
        if ray.train.get_context().get_world_rank() == 0:
            print(metrics)

# [4] Configure scaling and resource requirements.
scaling_config = ray.train.ScalingConfig(num_workers=2, use_gpu=True)

# [5] Launch distributed training job.
trainer = ray.train.torch.TorchTrainer(
    train_func,
    scaling_config=scaling_config,
    # [5a] If running in a multi-node cluster, this is where you
    # should configure the run's persistent storage that's accessible
    # across all worker nodes.
    # run_config=ray.train.RunConfig(storage_path="s3://..."),
)
result = trainer.fit()

# [6] Load the trained model.
with result.checkpoint.as_directory() as checkpoint_dir:
    model_state_dict = torch.load(os.path.join(checkpoint_dir, "model.pt"))
    model = resnet18(num_classes=10)
    model.conv1 = torch.nn.Conv2d(
        1, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False
    )
    model.load_state_dict(model_state_dict)

Ray Train provides:

- Multi-node and multi-GPU training capabilities

- Support for popular frameworks (PyTorch, TensorFlow, Horovod)

- Checkpointing and fault tolerance

- Integration with hyperparameter tuning
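In data-parallel training, each worker iterates over a different slice of the dataset, and the `set_epoch` call in the training loop changes the shuffle order from one epoch to the next. The sketch below illustrates that idea in plain Python. It is not PyTorch’s `DistributedSampler` implementation, and `shard_indices` is a hypothetical helper.

```python
import random


def shard_indices(dataset_size, world_size, rank, epoch, seed=0):
    """Shuffle indices deterministically per epoch, then give each rank a strided slice."""
    indices = list(range(dataset_size))
    # Every worker seeds with (seed + epoch), so all of them agree on the order
    random.Random(seed + epoch).shuffle(indices)
    return indices[rank::world_size]


world_size = 2
epoch0 = [shard_indices(10, world_size, rank, epoch=0) for rank in range(world_size)]
epoch1 = [shard_indices(10, world_size, rank, epoch=1) for rank in range(world_size)]

# The shards are disjoint and together cover the whole dataset
covered = sorted(i for shard in epoch0 for i in shard)
print(covered == list(range(10)))
print(epoch0, epoch1)
```

Because every worker derives the same permutation from the shared seed and epoch, no coordination is needed at runtime to keep the shards from overlapping.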

Ray Tune for Hyperparameter Optimization

Hyperparameter tuning is crucial for AI and ML model performance. Ray Tune provides scalable hyperparameter optimization.

To run, install the following:

pip install "ray[tune]"

from ray import tune
from ray.tune.schedulers import ASHAScheduler

# Define the objective function to optimize
# (`build_model` and `train_epoch` are user-supplied helpers not shown here)
def objective(config):
    model = build_model(config)
    for epoch in range(100):
        # Train the model
        loss = train_epoch(model)
        # Report metrics to Tune; this keyword form is the legacy API, and
        # newer Ray releases expect a dict such as {"loss": loss}
        tune.report(loss=loss)

# Configure the search space
search_space = {
    "learning_rate": tune.loguniform(1e-4, 1e-1),
    "batch_size": tune.choice([16, 32, 64, 128]),
    "hidden_layers": tune.randint(1, 5)
}

# Run hyperparameter optimization
analysis = tune.run(
    objective,
    config=search_space,
    scheduler=ASHAScheduler(metric="loss", mode="min"),
    num_samples=100
)

# Get the best configuration
best_config = analysis.get_best_config(metric="loss", mode="min")

Ray Tune offers:

- Various search algorithms (grid search, random search, Bayesian optimization)

- Adaptive resource allocation

- Early stopping for inefficient trials

- Integration with ML frameworks
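Early-stopping schedulers such as ASHA build on successive halving: run many trials on a small budget, keep the best fraction, and give the survivors a larger budget. The following is a simplified synchronous sketch of that idea in plain Python, whereas ASHA itself promotes trials asynchronously. `successive_halving` and the toy `evaluate` function are hypothetical, with `evaluate` standing in for training a configuration for `budget` epochs.

```python
def successive_halving(configs, evaluate, min_budget=1, reduction_factor=2):
    """Keep the best 1/reduction_factor of the trials at each rung, growing the budget."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Lower loss is better, so the best trials sort first
        scored = sorted(survivors, key=lambda cfg: evaluate(cfg, budget))
        keep = max(1, len(scored) // reduction_factor)
        survivors = scored[:keep]
        budget *= reduction_factor
    return survivors[0]


def evaluate(config, budget):
    # Toy loss: distance of the learning rate from 0.01, shrinking with more budget
    return abs(config["learning_rate"] - 0.01) / budget


candidates = [{"learning_rate": lr} for lr in (0.0001, 0.001, 0.01, 0.1)]
best = successive_halving(candidates, evaluate)
print(best)
```

Half of the candidates are dropped after the cheapest rung, which is where the compute savings come from: poor configurations never receive the larger budgets.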

Ray Serve for Model Deployment

Ray Serve is designed for deploying ML models at scale.

Install Ray Serve and its dependencies:

pip install "ray[serve]"

import ray
from ray import serve
from starlette.requests import Request
import torch

# Define a deployment for our model
@serve.deployment(num_replicas=2)
class ModelDeployment:
    def __init__(self, model_path):
        self.model = torch.load(model_path)
        self.model.eval()

    async def __call__(self, request: Request):
        data = await request.json()
        input_tensor = torch.tensor(data["input"])
        with torch.no_grad():
            prediction = self.model(input_tensor).tolist()
        return {"prediction": prediction}

# Deploy the model. Ray 2.x builds an application with `.bind()` and starts it
# with `serve.run()`, which replaces the older `serve.start()` / `.deploy()` calls.
serve.run(ModelDeployment.bind("./trained_model.pt"), route_prefix="/predict")

Ray Serve enables:

- Model composition and microservices

- Horizontal scaling

- Traffic splitting and A/B testing

- Batching for performance optimization
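The batching point above rests on a simple observation: one model call on several inputs is usually cheaper than several calls on one input each. A minimal plain-Python sketch of grouping pending requests follows. It is not Ray Serve’s batching implementation, and `make_batches` and `CountingModel` are hypothetical names used only for illustration.

```python
def make_batches(items, max_batch_size):
    """Split pending requests into chunks of at most max_batch_size."""
    return [items[i:i + max_batch_size] for i in range(0, len(items), max_batch_size)]


class CountingModel:
    """Toy model that doubles its inputs and counts how often it is invoked."""

    def __init__(self):
        self.calls = 0

    def predict(self, batch):
        self.calls += 1
        return [2 * x for x in batch]


model = CountingModel()
pending = [1, 2, 3, 4, 5]
predictions = [
    y
    for batch in make_batches(pending, max_batch_size=2)
    for y in model.predict(batch)
]
print(predictions, model.calls)
```

Five requests are served with three model invocations here. In a real service the batch size trades throughput against the extra latency of waiting for a batch to fill.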

Ray Data for ML-Optimized Data Processing

Ray Data provides distributed data processing capabilities optimized for ML workloads:

import ray

# Initialize Ray
ray.init()

# Create a dataset from a file or data source
ds = ray.data.read_csv("s3://bucket/path/to/data.csv")

# Apply transformations in parallel
def preprocess_batch(batch):
    # Apply preprocessing to the batch (placeholder: return it unchanged)
    processed_batch = batch
    return processed_batch

transformed_ds = ds.map_batches(preprocess_batch)

# Split for training and validation
train_ds, val_ds = transformed_ds.train_test_split(test_size=0.2)

# Iterate over PyTorch-ready batches, shuffling within a local buffer
train_loader = train_ds.iter_torch_batches(batch_size=32, local_shuffle_buffer_size=1024)

Ray Data offers:

- Parallel data loading and transformation

- Integration with ML training

- Support for various data formats and sources

- Optimized for ML workflows

Distributed Fine-tuning of a Large Language Model with Ray

Let’s implement a complete project that demonstrates how to use Ray for fine-tuning a large language model (LLM) using distributed computing resources. We’ll use GPT-J-6B as our base model and Ray Train with DeepSpeed for efficient distributed training.

In this project, we’ll:

- Set up a Ray cluster for distributed training

- Prepare a dataset for fine-tuning the LLM

- Configure DeepSpeed for memory-efficient training

- Implement distributed training using Ray Train

- Evaluate the model and deploy it with Ray Serve

Environment Setup

First, let’s set up our environment with the necessary dependencies:

# Install required packages
!pip install "ray[train]" transformers datasets accelerate deepspeed torch evaluate

Ray Cluster Configuration

For this venture, we’ll configure a Ray cluster with a number of GPUs:

import ray

import os

# Configuration

model_name = "EleutherAI/gpt-j-6B" # We'll use GPT-J-6B as our base mannequin

use_gpu = True

num_workers = 16 # Variety of coaching staff (alter primarily based on out there GPUs)

cpus_per_worker = 8 # CPUs per employee

# Initialize Ray

ray.init(

runtime_env={

"pip": [

"transformers==4.26.0",

"accelerate==0.18.0",

"datasets",

"evaluate",

"deepspeed==0.12.3",

"torch>=1.12.0"

]

}

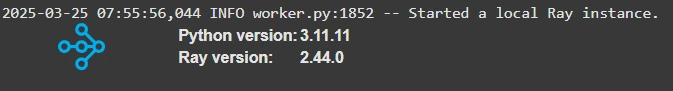

)This initialization creates a neighborhood Ray cluster. In a manufacturing atmosphere, you would possibly hook up with an current Ray cluster as a substitute.

Data Preparation

For fine-tuning our language model, we’ll prepare a text dataset:

import pandas as pd
import ray.data
from datasets import Dataset, load_dataset
from transformers import AutoTokenizer

# Load tokenizer for our model
tokenizer = AutoTokenizer.from_pretrained(model_name)
tokenizer.pad_token = tokenizer.eos_token  # GPT models don't have a pad token by default

# Load a text dataset (example using a subset of wikitext)
dataset = load_dataset("wikitext", "wikitext-2-raw-v1")

# Define preprocessing function for tokenization
def preprocess_function(batch: pd.DataFrame) -> pd.DataFrame:
    encoded = tokenizer(
        batch["text"].tolist(),
        truncation=True,
        max_length=512,
        padding="max_length",
    )
    return pd.DataFrame(
        {"input_ids": encoded["input_ids"], "attention_mask": encoded["attention_mask"]}
    )

# Tokenize one split in parallel using Ray Data, then convert it back
# to a Hugging Face dataset via pandas
def tokenize_split(split_name):
    ray_dataset = ray.data.from_huggingface(dataset[split_name])
    tokenized_dataset = ray_dataset.map_batches(
        preprocess_function,
        batch_format="pandas",
        batch_size=100
    )
    return Dataset.from_pandas(tokenized_dataset.to_pandas())

train_dataset = tokenize_split("train")
eval_dataset = tokenize_split("validation")

DeepSpeed Configuration for Memory-Efficient Training

Training large models like GPT-J-6B requires memory optimization techniques. DeepSpeed is a deep learning optimization library that enables efficient training.

Let’s configure it for our distributed training:

# DeepSpeed configuration
deepspeed_config = {
    "fp16": {
        "enabled": True
    },
    "zero_optimization": {
        "stage": 2,
        "offload_optimizer": {
            "device": "cpu"
        },
        "allgather_bucket_size": 5e8,
        "reduce_bucket_size": 5e8
    },
    "train_batch_size": "auto",
    "train_micro_batch_size_per_gpu": 4,
    "gradient_accumulation_steps": "auto",
    "optimizer": {
        "type": "AdamW",
        "params": {
            "lr": 5e-5,
            "weight_decay": 0.01
        }
    }
}

# Save the config to a file
import json

with open("deepspeed_config.json", "w") as f:
    json.dump(deepspeed_config, f)

This configuration uses several optimization techniques:

- FP16 precision to reduce memory usage

- ZeRO stage 2 to partition optimizer states and gradients across GPUs

- CPU offloading to move optimizer states from GPU to CPU memory

- Automatic batch size and gradient accumulation configuration
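A rough back-of-the-envelope calculation shows why these settings matter. The sketch below uses the common accounting for mixed-precision Adam (2 bytes per parameter for FP16 weights, 2 for FP16 gradients, and 12 for optimizer states) and ignores activations, temporary buffers, and CPU offloading. The 6 billion parameter count is an approximation for GPT-J-6B, the 16 GPUs mirror `num_workers` from the cluster configuration, and both helper functions are hypothetical.

```python
def zero_model_state_gb(num_params, num_gpus, stage):
    """Approximate per-GPU model-state memory (GB) for mixed-precision Adam under ZeRO."""
    params, grads, optim = 2 * num_params, 2 * num_params, 12 * num_params  # bytes
    if stage >= 1:
        optim /= num_gpus   # stage 1 partitions optimizer states
    if stage >= 2:
        grads /= num_gpus   # stage 2 also partitions gradients
    if stage >= 3:
        params /= num_gpus  # stage 3 also partitions parameters
    return (params + grads + optim) / 1e9


def global_batch_size(micro_batch_per_gpu, grad_accum_steps, num_gpus):
    """Effective batch size = micro batch x accumulation steps x number of GPUs."""
    return micro_batch_per_gpu * grad_accum_steps * num_gpus


num_params = 6_000_000_000  # assumption: roughly the size of GPT-J-6B
for stage in (0, 1, 2, 3):
    print(f"ZeRO stage {stage}: {zero_model_state_gb(num_params, 16, stage):.2f} GB per GPU")

print("global batch size:", global_batch_size(4, 1, 16))
```

Under these assumptions, stage 2 on 16 GPUs brings model states from about 96 GB down to roughly 17 GB per GPU, and offloading the optimizer states to CPU reduces the GPU share further.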

Implementing Distributed Training

Define the training function and use Ray Train to distribute it across the cluster:

from transformers import (
    AutoModelForCausalLM,
    DataCollatorForLanguageModeling,
    Trainer,
    TrainingArguments,
)
import torch
from ray.train import ScalingConfig
from ray.train.torch import TorchTrainer
from ray.train.huggingface.transformers import RayTrainReportCallback, prepare_trainer

# Define the training function to be executed on each worker.
# Ray Train sets up the torch.distributed process group before calling it.
def train_func(config):
    # Load pre-trained model
    model = AutoModelForCausalLM.from_pretrained(
        config["model_name"],
        revision="float16",
        torch_dtype=torch.float16,
        low_cpu_mem_usage=True
    )

    # Set up training arguments
    training_args = TrainingArguments(
        output_dir="./output",
        per_device_train_batch_size=config["batch_size"],
        per_device_eval_batch_size=config["batch_size"],
        evaluation_strategy="epoch",
        num_train_epochs=config["epochs"],
        weight_decay=0.01,  # keep in sync with the DeepSpeed optimizer settings
        fp16=True,
        report_to="none",
        deepspeed="deepspeed_config.json",
        save_strategy="epoch",
        load_best_model_at_end=True,
        logging_steps=10
    )

    # Initialize the Hugging Face Trainer. The collator copies input_ids into
    # labels so the causal language modeling loss can be computed, and passing
    # the tokenizer means it is saved alongside the model.
    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=config["train_dataset"],
        eval_dataset=config["eval_dataset"],
        tokenizer=tokenizer,
        data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
    )

    # Report metrics and checkpoints to Ray Train, then let Ray prepare the Trainer
    trainer.add_callback(RayTrainReportCallback())
    trainer = prepare_trainer(trainer)

    # Train the model
    trainer.train()

    # Save the final model
    trainer.save_model("./final_model")

# Configure the distributed training
scaling_config = ScalingConfig(
    num_workers=num_workers,
    use_gpu=use_gpu,
    resources_per_worker={"CPU": cpus_per_worker, "GPU": 1}
)

# Create the Ray Train trainer
trainer = TorchTrainer(
    train_func,
    scaling_config=scaling_config,
    train_loop_config={
        "model_name": model_name,
        "train_dataset": train_dataset,
        "eval_dataset": eval_dataset,
        "batch_size": 4,
        "epochs": 3
    }
)

# Start the distributed training
result = trainer.fit()

This code sets up distributed training across multiple GPUs using Ray Train. The train_func is executed on each worker, with Ray handling the distribution of the workload.

Model Evaluation

After training, we’ll evaluate the model’s performance:

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# Load the fine-tuned model
model_path = "./final_model"
tokenizer = AutoTokenizer.from_pretrained(model_path)
model = AutoModelForCausalLM.from_pretrained(model_path)

# Create a text generation pipeline
text_generator = pipeline("text-generation", model=model, tokenizer=tokenizer, device=0)

# Example prompts for evaluation
prompts = [
    "Artificial intelligence is",
    "The future of distributed computing",
    "Machine learning models can"
]

# Generate text for each prompt
for prompt in prompts:
    generated_text = text_generator(prompt, max_length=100, num_return_sequences=1)[0]["generated_text"]
    print(f"Prompt: {prompt}")
    print(f"Generated: {generated_text}")
    print("---")

Deploying the Model with Ray Serve

Finally, we’ll deploy the fine-tuned model for inference using Ray Serve:

import ray
import torch
from ray import serve
from starlette.requests import Request
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# Define a deployment for our model
@serve.deployment(num_replicas=2, ray_actor_options={"num_gpus": 1})
class TextGenerationModel:
    def __init__(self, model_path):
        self.tokenizer = AutoTokenizer.from_pretrained(model_path)
        self.model = AutoModelForCausalLM.from_pretrained(
            model_path,
            torch_dtype=torch.float16,
            device_map="auto"
        )
        self.pipeline = pipeline(
            "text-generation",
            model=self.model,
            tokenizer=self.tokenizer
        )

    async def __call__(self, request: Request) -> dict:
        data = await request.json()
        prompt = data.get("prompt", "")
        max_length = data.get("max_length", 100)
        generated_text = self.pipeline(
            prompt,
            max_length=max_length,
            num_return_sequences=1
        )[0]["generated_text"]
        return {"generated_text": generated_text}

# Deploy the model. Ray 2.x builds an application with `.bind()` and starts it
# with `serve.run()`, which replaces the older `serve.start()` / `.deploy()` calls.
serve.run(TextGenerationModel.bind("./final_model"), route_prefix="/generate")

# Example client code to query the deployed model
import requests

response = requests.post(
    "http://localhost:8000/generate",
    json={"prompt": "Artificial intelligence is", "max_length": 100}
)
print(response.json())

This deployment uses Ray Serve to create a scalable inference service. Ray Serve handles the complexity of scaling, load balancing, and resource management, allowing us to focus on the application logic.

Real-World Applications and Case Studies of Ray

Ray has gained significant traction in various industries due to its ability to scale AI/ML workloads efficiently. Here are some notable real-world applications and case studies:

Large-Scale AI Model Training (OpenAI, Uber, and Meta)

- OpenAI used Ray to scale reinforcement learning for training AI agents like Dota 2 bots.

- Uber’s Michelangelo leverages Ray for distributed hyperparameter tuning and model training at scale.

- Meta (Facebook) employs Ray to optimize large-scale deep learning workflows.

Financial Services and Fraud Detection (Ant Group, JP Morgan, and Goldman Sachs)

- Ant Group (Alibaba’s fintech arm) integrates Ray for real-time fraud detection and risk assessment.

- JP Morgan and Goldman Sachs use Ray to accelerate financial modeling, risk analysis, and algorithmic trading strategies.

Autonomous Vehicles and Robotics (NVIDIA, Waymo, and Tesla)

- NVIDIA uses Ray for reinforcement learning-based autonomous driving simulations.

- Waymo and Tesla employ Ray to train self-driving car models with large-scale sensor data processing.

Healthcare and Drug Discovery (DeepMind, Genentech, and AstraZeneca)

- DeepMind leverages Ray for protein folding simulations and AI-driven medical research.

- Genentech and AstraZeneca use Ray in AI-driven drug discovery, accelerating computational biology and genomics research.

Large-Scale Recommendation Systems (Netflix, TikTok, and Amazon)

- Netflix employs Ray to power personalized content recommendations and A/B testing.

- TikTok scales recommendation models with Ray to improve video recommendations in real time.

- Amazon enhances its recommendation algorithms and e-commerce search using Ray’s distributed computing capabilities.

Cloud & AI Infrastructure (Google Cloud, AWS, and Microsoft Azure)

- Google Cloud Vertex AI integrates Ray for scalable machine learning model training.

- AWS SageMaker supports Ray for distributed hyperparameter tuning.

- Microsoft Azure uses Ray for optimizing AI and machine learning services.

Ray at OpenAI: Powering Large Language Models

One of the most notable users of Ray is OpenAI, which has leveraged the framework for training its large language models, including ChatGPT. According to reports, Ray was key in enabling OpenAI to enhance its ability to train large models efficiently.

Before adopting Ray, OpenAI used a collection of custom tools to develop early models. However, as the limitations of this approach became apparent, the company switched to Ray. OpenAI’s president, Greg Brockman, highlighted this transition at the Ray Summit.

The key advantage that Ray provides for LLM training is the ability to run the same code on both a developer’s laptop and a massive distributed cluster. This capability becomes increasingly important as models grow in size and complexity.

Advanced Ray Features and Best Practices

Let us now explore advanced Ray features and best practices:

Memory Management in Distributed Applications

Efficient memory management is crucial when working with large-scale ML workloads:

- Object Spilling: Ray automatically spills objects to disk when memory pressure is high. Configure spilling thresholds appropriately for your workload:

ray.init(
    object_store_memory=10 * 10**9,  # 10 GB
)
# The memory monitor's polling interval is set outside ray.init(), through the
# RAY_memory_monitor_refresh_ms environment variable (for example, 100 for every 100 ms)

- Reference Management: Explicitly delete references to large objects when no longer needed:

# Create a large object
data_ref = ray.put(large_dataset)

# Use the reference
result_ref = process_data.remote(data_ref)
result = ray.get(result_ref)

# Delete the reference when done
del data_ref

- Streaming Data Processing: For very large datasets, use Ray Data’s streaming capabilities instead of loading everything into memory:

import ray

dataset = ray.data.read_csv("s3://bucket/large_dataset/*.csv")

# Process the dataset in batches without loading everything
for batch in dataset.iter_batches():
    # Process each batch
    process_batch(batch)
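The principle behind batch-wise iteration can be shown without Ray: a generator yields one batch at a time, so only the current batch is held in memory. The sketch below uses the standard library’s `csv` module and an in-memory file as a stand-in for a large file on disk or object storage. `iter_batches` here is a hypothetical helper, not Ray Data’s method of the same name.

```python
import csv
import io


def iter_batches(lines, batch_size):
    """Yield lists of parsed CSV rows without materializing the whole file."""
    reader = csv.DictReader(lines)
    batch = []
    for row in reader:
        batch.append(row)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # final partial batch
        yield batch


# An in-memory stand-in for a large CSV file
fake_file = io.StringIO("id,value\n1,10\n2,20\n3,30\n4,40\n5,50\n")

batch_sums = [
    sum(int(row["value"]) for row in batch)
    for batch in iter_batches(fake_file, batch_size=2)
]
print(batch_sums)
```

Ray Data applies the same idea across a cluster, reading and transforming blocks of the dataset in parallel while the consumer iterates over batches.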

Debugging Distributed Applications

Debugging distributed applications can be challenging. Ray provides several tools to help:

- Ray Dashboard: Provides visibility into task execution, actor states, and resource utilization:

# Start Ray with the dashboard enabled
ray.init(dashboard_host="0.0.0.0")

# Access the dashboard at http://<head-node-ip>:8265

- Detailed Logging: Use Ray’s logging utilities to capture logs from all workers:

import ray
import logging

# Configure logging
ray.init(logging_level=logging.INFO)

@ray.remote
def task_with_logging():
    logger = logging.getLogger("ray")
    logger.info("This message will be captured in Ray's logs")
    return "Task completed"

- Exception Handling: Ray propagates exceptions from remote tasks back to the driver:

@ray.remote
def task_that_might_fail(x):
    if x < 0:
        raise ValueError("x must be non-negative")
    return x * x

# This will raise the ValueError in the driver
try:
    result = ray.get(task_that_might_fail.remote(-1))
except ValueError as e:
    print(f"Caught exception: {e}")

Ray vs. Different Distributed Computing Frameworks

Let’s compare Ray with other distributed computing frameworks:

Ray vs. Dask

Both Ray and Dask are Python-native distributed computing frameworks, but they have different focuses:

- Programming Model: Ray’s task and actor model provides more flexibility compared to Dask’s task graph approach.

- ML/AI Focus: Ray has specialized libraries for ML (Train, Tune, Serve), while Dask focuses more on data processing.

- Data Processing: Dask has deeper integration with the PyData ecosystem (NumPy, Pandas).

- Performance: Ray typically shows better performance for fine-grained tasks and dynamic workloads.

When to choose Ray over Dask:

- For ML-specific workloads (training, hyperparameter tuning, model serving)

- When you need the actor programming model for stateful computation

- For highly dynamic task graphs that change during execution

Ray vs. Apache Spark

Ray and Apache Spark serve different primary use cases:

- Language Support: Ray is Python-first, while Spark is JVM-based with Python bindings.

- Use Cases: Spark excels at batch data processing, while Ray is designed for ML/AI workloads.

- Iteration Speed: Ray offers faster iteration for ML experiments than Spark.

- Programming Model: Ray’s model is more flexible than Spark’s RDD/DataFrame abstractions.

When to choose Ray over Spark:

- For Python-native ML workflows

- When you need fine-grained task scheduling

- For interactive development and fast iteration cycles

- When building complex applications that mix batch and online processing

Ray vs. Kubernetes + Custom ML Code

While Kubernetes can be used to orchestrate ML workloads:

- Abstraction Level: Ray provides higher-level abstractions specific to ML/AI than Kubernetes.

- Development Experience: Ray offers a more seamless development experience without requiring knowledge of containers and YAML.

- Integration: Ray can run on Kubernetes, combining the strengths of both systems.

When to choose Ray over raw Kubernetes:

- To avoid the complexity of container orchestration

- For a more integrated ML development experience

- When you want to focus on algorithms rather than infrastructure

Reference: Ray docs

Conclusion

Ray has emerged as a critical tool for scaling AI and ML workloads, from research prototypes to production systems. Its intuitive programming model, combined with specialized libraries for training, tuning, and serving, makes it an attractive choice for organizations looking to scale their AI efforts efficiently. Ray provides a path to scale that doesn’t require rewriting existing code or mastering complex distributed systems concepts.

By understanding Ray’s core concepts, libraries, and best practices outlined in this guide, developers and data scientists can leverage distributed computing to tackle problems that would be infeasible on a single machine, opening up new possibilities in AI and ML development.

Whether you’re training large language models, optimizing hyperparameters, serving models at scale, or processing large datasets, Ray provides the tools and abstractions to make distributed computing accessible and productive. As the field continues to advance, Ray is positioned to play an increasingly important role in enabling the next generation of AI applications.

Key Takeaways

- Ray simplifies distributed computing for AI/ML by enabling seamless scaling from a single machine to a cluster with minimal code changes.

- Ray’s ecosystem (Train, Tune, Serve, Data) provides end-to-end solutions for distributed training, hyperparameter tuning, model serving, and data processing.

- Ray’s task and actor-based programming model makes parallelization intuitive, transforming Python applications into scalable distributed workloads.

- Ray optimizes resource management through efficient scheduling, memory management, and automatic scaling across CPU/GPU clusters.

- Ray supports real-world AI applications at scale, including LLM fine-tuning, reinforcement learning, and large-scale data processing.

Frequently Asked Questions

A. Ray is an open-source framework for distributed computing, enabling Python applications to scale across multiple machines with minimal code changes. It’s widely used for AI/ML workloads, reinforcement learning, and large-scale data processing.

A. Ray abstracts the complexities of parallelization by providing a simple task and actor-based programming model. Developers can distribute workloads across multiple CPUs and GPUs without managing low-level infrastructure.

A. While Spark is optimized for batch data processing, Ray is more flexible, supporting dynamic, interactive, and AI/ML-specific workloads. Ray also has built-in support for deep learning and reinforcement learning applications.

A. Yes, Ray supports deployment on major cloud providers (AWS, GCP, Azure) and integrates with Kubernetes for scalable orchestration.

A. Ray is ideal for distributed AI/ML model training, hyperparameter tuning, large-scale data processing, reinforcement learning, and serving AI models in production.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
