Drone shows are an increasingly popular form of large-scale light display. These shows incorporate hundreds to thousands of airborne bots, each programmed to fly in paths that together form intricate shapes and patterns across the sky. When they go as planned, drone shows can be spectacular. But when one or more drones malfunction, as has happened recently in Florida, New York, and elsewhere, they can be a serious hazard to spectators on the ground.

Drone show accidents highlight the challenges of maintaining safety in what engineers call “multiagent systems”: systems of multiple coordinated, collaborative, and computer-programmed agents, such as robots, drones, and self-driving cars.

Now, a team of MIT engineers has developed a training method for multiagent systems that can guarantee their safe operation in crowded environments. The researchers found that once the method is used to train a small number of agents, the safety margins and controls learned by those agents can automatically scale to any larger number of agents, in a way that ensures the safety of the system as a whole.

In real-world demonstrations, the team trained a small number of palm-sized drones to safely carry out different objectives, from simultaneously switching positions midflight to landing on designated moving vehicles on the ground. In simulations, the researchers showed that the same programs, trained on a few drones, could be copied and scaled up to thousands of drones, enabling a large system of agents to safely accomplish the same tasks.

“This could be a standard for any application that requires a team of agents, such as warehouse robots, search-and-rescue drones, and self-driving cars,” says Chuchu Fan, associate professor of aeronautics and astronautics at MIT. “This provides a shield, or safety filter, saying each agent can continue with their mission, and we’ll tell you how to be safe.”

Fan and her colleagues report on their new method in a study appearing this month in the journal IEEE Transactions on Robotics. The study’s co-authors are MIT graduate students Songyuan Zhang and Oswin So as well as former MIT postdoc Kunal Garg, who is now an assistant professor at Arizona State University.

Mall margins

When engineers design for safety in any multiagent system, they typically have to consider the potential paths of every single agent with respect to every other agent in the system. This pair-wise path-planning is a time-consuming and computationally expensive process. And even then, safety is not guaranteed.

“In a drone show, each drone is given a specific trajectory (a set of waypoints and a set of times), and then they essentially close their eyes and follow the plan,” says Zhang, the study’s lead author. “Since they only know where they have to be and at what time, if there are unexpected things that happen, they don’t know how to adapt.”

The MIT team looked instead to develop a method to train a small number of agents to maneuver safely, in a way that could efficiently scale to any number of agents in the system. And, rather than plan specific paths for individual agents, the method would enable agents to continually map their safety margins, or boundaries beyond which they might be unsafe. An agent could then take any number of paths to accomplish its task, as long as it stays within its safety margins.

In some sense, the team says, the method is similar to how humans intuitively navigate their surroundings.

“Say you’re in a really crowded shopping mall,” So explains. “You don’t care about anyone beyond the people who are in your immediate neighborhood, like the 5 meters surrounding you, in terms of getting around safely and not bumping into anyone. Our work takes a similar local approach.”

Safety barrier

In their new study, the team presents their method, GCBF+, which stands for “Graph Control Barrier Function.” A barrier function is a mathematical term used in robotics that calculates a sort of safety barrier, or a boundary beyond which an agent has a high probability of being unsafe. For any given agent, this safety zone can change moment to moment, as the agent moves among other agents that are themselves moving within the system.

When designers calculate barrier functions for any one agent in a multiagent system, they typically have to take into account the potential paths and interactions with every other agent in the system. Instead, the MIT team’s method calculates the safety zones of just a handful of agents, in a way that is accurate enough to represent the dynamics of many more agents in the system.

“Then we can sort of copy-paste this barrier function for every single agent, and then suddenly we have a graph of safety zones that works for any number of agents in the system,” So says.

To calculate an agent’s barrier function, the team’s method first takes into account an agent’s “sensing radius,” or how much of the surroundings an agent can observe, depending on its sensor capabilities. Just as in the shopping mall analogy, the researchers assume that the agent only cares about the agents that are within its sensing radius, in terms of keeping safe and avoiding collisions with those agents.
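The sensing-radius idea can be pictured as a graph in which each agent is linked only to the agents it can currently observe. The following is a minimal sketch of that picture, not the study's implementation: the function name `neighbors_within_radius`, the 2D positions, and the 5-unit radius (borrowed from the shopping mall analogy) are all assumptions made for illustration.

```python
import math

def neighbors_within_radius(positions, sensing_radius):
    """For each agent index, list the other agents that lie within its sensing radius.

    Hypothetical helper for illustration; positions are (x, y) tuples.
    """
    graph = {i: [] for i in range(len(positions))}
    for i, p in enumerate(positions):
        for j, q in enumerate(positions):
            # An agent ignores itself and anything farther away than it can sense.
            if i != j and math.dist(p, q) <= sensing_radius:
                graph[i].append(j)
    return graph

# Three agents close together and one far away (made-up coordinates).
positions = [(0.0, 0.0), (3.0, 0.0), (0.0, 4.0), (20.0, 20.0)]
graph = neighbors_within_radius(positions, sensing_radius=5.0)
print(graph)  # {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: []}
```

The distant agent ends up with no neighbors, so under this local view it would not need to account for the cluster at all until some agent comes within range.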

Then, using computer models that capture an agent’s particular mechanical capabilities and limits, the team simulates a “controller,” or a set of instructions for how the agent and a handful of similar agents should move around. They then run simulations of multiple agents moving along certain trajectories, and record whether and how they collide or otherwise interact.

“Once we have these trajectories, we can compute some laws that we want to minimize, like say, how many safety violations we have in the current controller,” Zhang says. “Then we update the controller to be safer.”
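As a rough illustration of the kind of quantity Zhang mentions, the sketch below counts how often two simulated agents come closer than a chosen safe distance. It is a simplified stand-in, not the measure used in the study: the function name `count_safety_violations`, the `safe_distance` value, and the two hand-made trajectories are assumptions.

```python
import math

def count_safety_violations(trajectories, safe_distance):
    """Count (timestep, agent pair) events where two agents are closer than safe_distance.

    trajectories[i][t] is the (x, y) position of agent i at timestep t.
    """
    n_agents = len(trajectories)
    n_steps = len(trajectories[0])
    violations = 0
    for t in range(n_steps):
        for i in range(n_agents):
            for j in range(i + 1, n_agents):
                if math.dist(trajectories[i][t], trajectories[j][t]) < safe_distance:
                    violations += 1
    return violations

# Two agents swapping positions head-on along a straight line.
agent_a = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
agent_b_straight = [(4.0, 0.0), (3.0, 0.0), (2.0, 0.0), (1.0, 0.0), (0.0, 0.0)]
# The same swap, with the second agent bowing out of the way mid-route.
agent_b_detour = [(4.0, 0.0), (3.0, 1.0), (2.0, 1.5), (1.0, 1.0), (0.0, 0.0)]

straight = count_safety_violations([agent_a, agent_b_straight], safe_distance=1.0)
detour = count_safety_violations([agent_a, agent_b_detour], safe_distance=1.0)
print(straight, detour)  # 1 0
```

A training loop in this spirit would prefer the controller that produced the detour, since it completes the same swap with fewer violations.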

In this way, a controller can be programmed into actual agents, which would enable them to continually map their safety zone based on any other agents they can sense in their immediate surroundings, and then move within that safety zone to accomplish their task.

“Our controller is reactive,” Fan says. “We don’t preplan a path beforehand. Our controller is constantly taking in information about where an agent is going, what is its velocity, how fast other drones are going. It’s using all this information to come up with a plan on the fly and it’s replanning every time. So, if the situation changes, it’s always able to adapt to stay safe.”
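To make the "replanning every time" idea concrete, here is a toy sketch of one reactive step: the agent looks only at its goal and the neighbors it currently senses, then picks the move that makes the most progress without crossing a safety boundary. Everything in it is an assumption for illustration. In particular, the `barrier` function is a hand-written distance check rather than the learned barrier function from the study, and the nine candidate moves are an arbitrary discretization.

```python
import math

def barrier(p, q, safe_distance):
    """Toy barrier value: non-negative when p and q are at least safe_distance apart."""
    return math.dist(p, q) - safe_distance

def safe_step(position, goal, neighbors, safe_distance, max_step=1.0):
    """Return the next position: the safe candidate closest to the goal.

    Candidates are staying put plus eight compass directions of length max_step.
    If no candidate both is safe and improves on the current distance to the
    goal, the agent stays where it is.
    """
    candidates = [(0.0, 0.0)]
    for k in range(8):
        angle = k * math.pi / 4
        candidates.append((max_step * math.cos(angle), max_step * math.sin(angle)))

    best, best_cost = position, math.dist(position, goal)
    for dx, dy in candidates:
        nxt = (position[0] + dx, position[1] + dy)
        # Keep only moves whose barrier value is non-negative for every sensed neighbor.
        if all(barrier(nxt, q, safe_distance) >= 0 for q in neighbors):
            cost = math.dist(nxt, goal)
            if cost < best_cost:
                best, best_cost = nxt, cost
    return best

start, goal = (0.0, 0.0), (4.0, 0.0)
free_move = safe_step(start, goal, neighbors=[], safe_distance=1.0)
blocker = (1.2, 0.5)  # a neighbor sitting close to the straight-line path
avoiding_move = safe_step(start, goal, neighbors=[blocker], safe_distance=1.0)
print(free_move, avoiding_move)
```

With no neighbors the agent heads straight for the goal; with the blocker in place it sidesteps diagonally instead. Calling `safe_step` again at every timestep, with fresh neighbor positions, gives the on-the-fly behavior Fan describes, although a greedy rule this simple can stall when every forward move is blocked.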

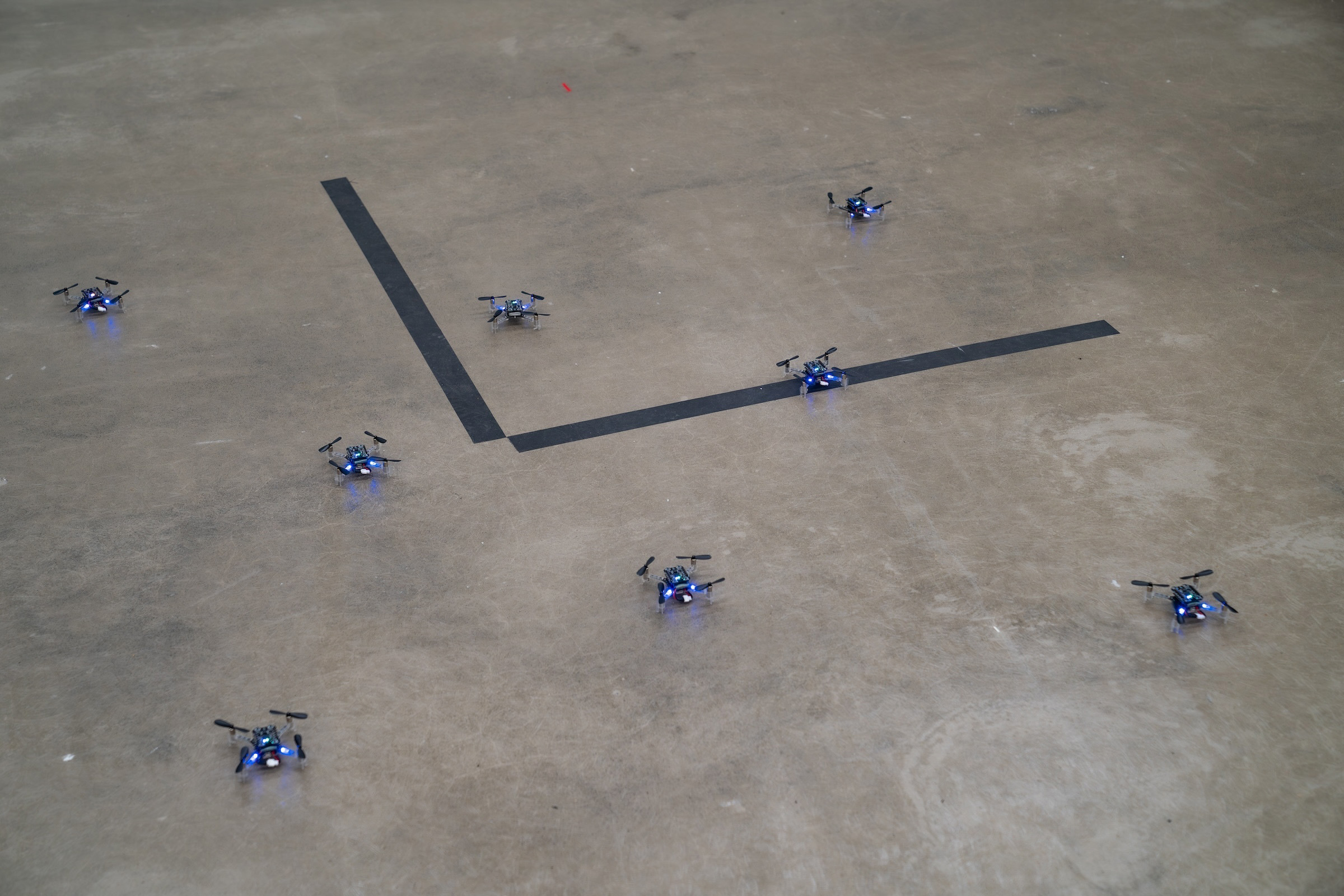

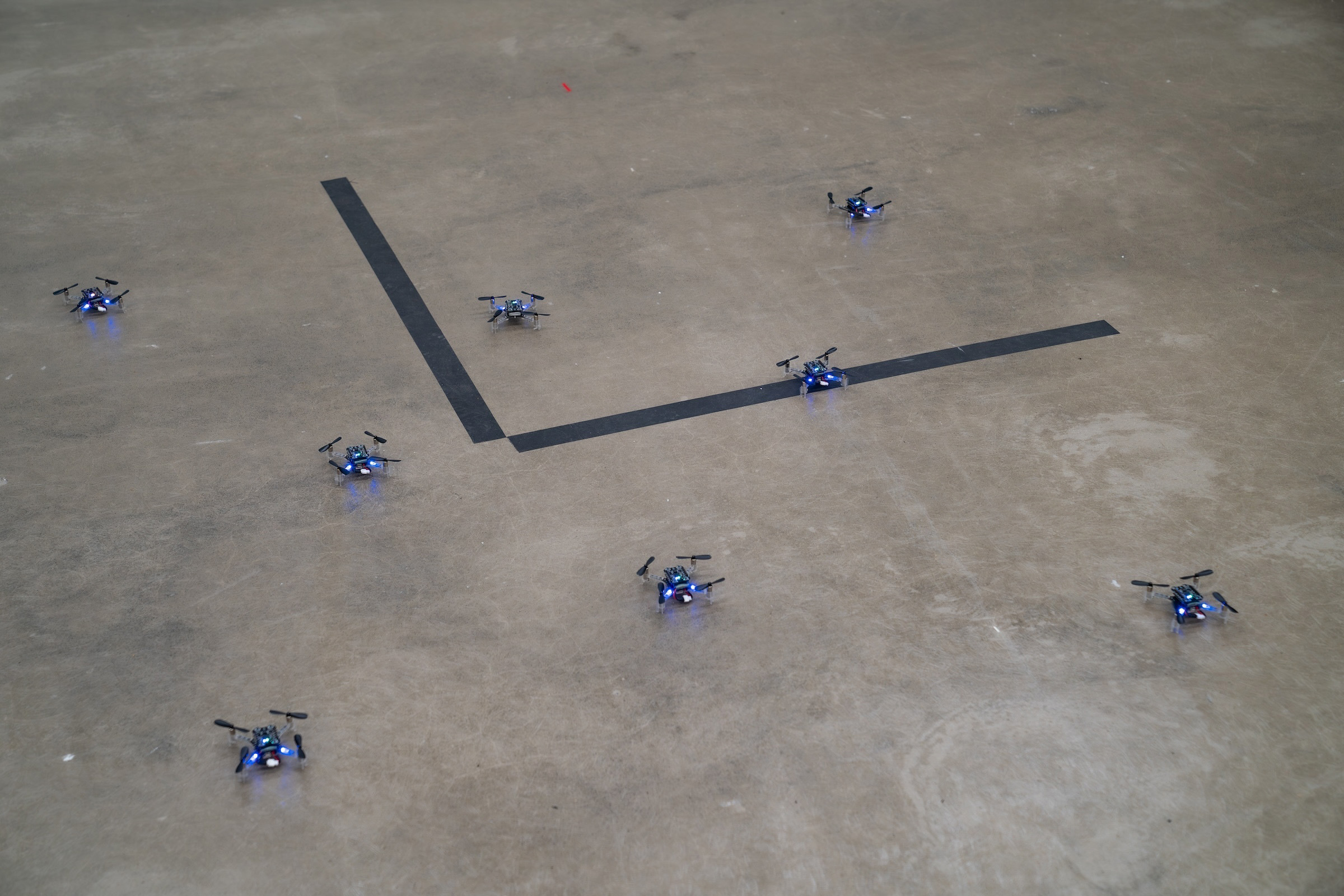

The team demonstrated GCBF+ on a system of eight Crazyflies (lightweight, palm-sized quadrotor drones), which they tasked with flying and switching positions in midair. If the drones were to do so by taking the straightest path, they would surely collide. But after training with the team’s method, the drones were able to make real-time adjustments to maneuver around each other, keeping within their respective safety zones, to successfully switch positions on the fly.

In similar fashion, the team tasked the drones with flying around, then landing on specific Turtlebots, wheeled robots with shell-like tops. The Turtlebots drove continuously around in a large circle, and the Crazyflies were able to avoid colliding with each other as they made their landings.

“Using our framework, we only need to give the drones their destinations instead of the whole collision-free trajectory, and the drones can figure out how to arrive at their destinations without collision themselves,” says Fan, who envisions the method could be applied to any multiagent system to guarantee its safety, including collision avoidance systems in drone shows, warehouse robots, autonomous driving vehicles, and drone delivery systems.

This work was partly supported by the U.S. National Science Foundation, MIT Lincoln Laboratory under the Safety in Aerobatic Flight Regimes (SAFR) program, and the Defence Science and Technology Agency of Singapore.
