DeepSeek-R1 is dominating tech discussions across Reddit, X, and developer forums, with users calling it the "people's AI" for its uncanny ability to rival paid models like Google Gemini and OpenAI's GPT-4o, all while costing nothing.

DeepSeek-R1, a free and open-source reasoning AI, offers a privacy-first alternative to OpenAI's $200/month o1 model, with comparable performance in coding, math, and logical problem-solving. This guide provides step-by-step instructions for installing DeepSeek-R1 locally and integrating it into projects, potentially saving hundreds of dollars monthly.

Why is DeepSeek-R1 trending?

Unlike closed models that lock users into subscriptions and data-sharing agreements, DeepSeek-R1 operates entirely offline when deployed locally. Benchmarks shared on social media show it solving LeetCode problems 12% faster than OpenAI's o1 model while using just 30% of the system resources. A TikTok demo of it coding a Python-based expense tracker in 90 seconds has racked up 2.7 million views, with comments like "Gemini could never" flooding the thread. Its appeal? No API fees, no usage caps, and no mandatory internet connection.

What is DeepSeek-R1 and how does it compare to OpenAI-o1?

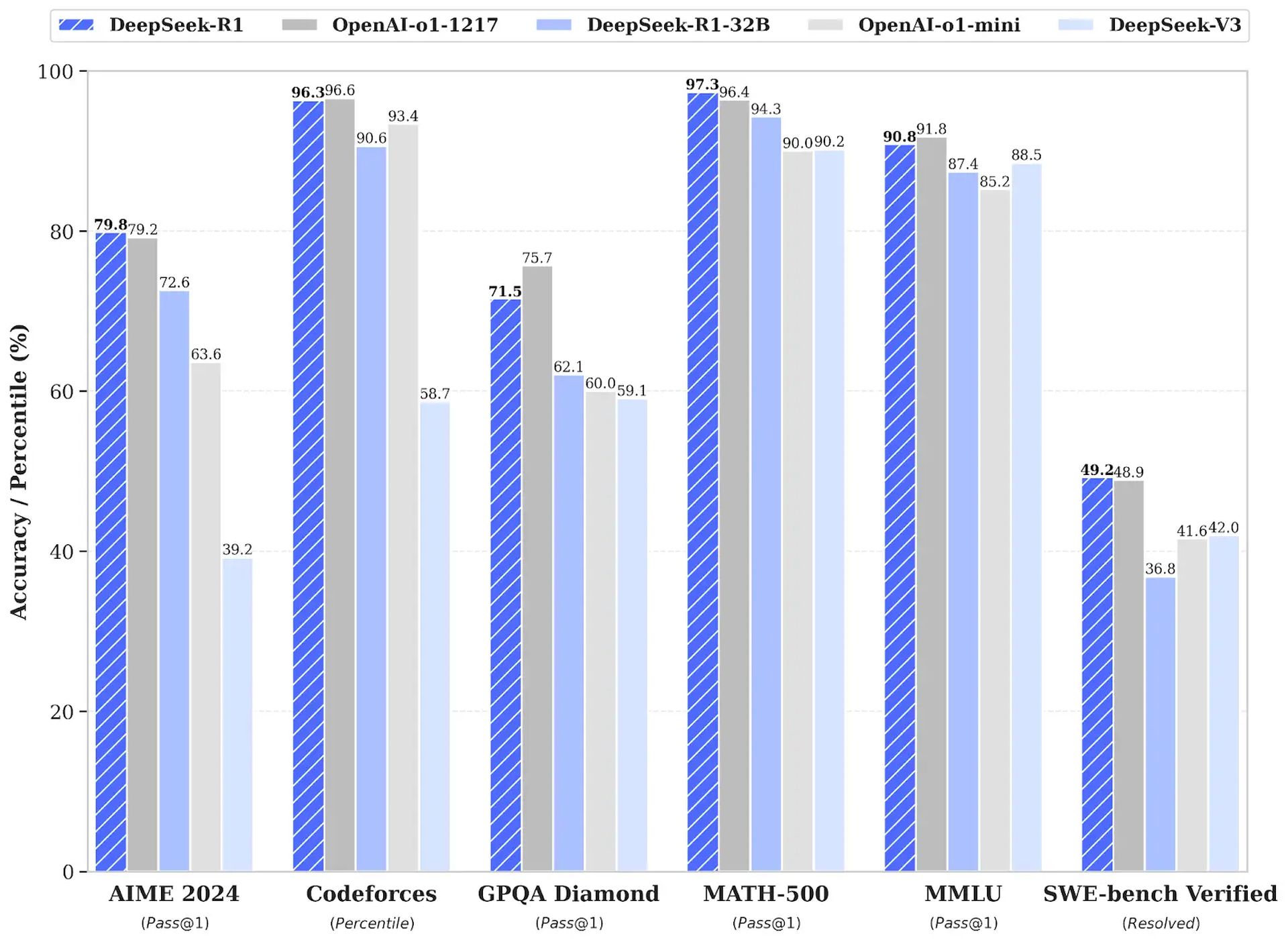

DeepSeek-R1 is a reasoning model trained primarily with reinforcement learning (RL) rather than relying on supervised fine-tuning alone, achieving a 79.8% pass@1 score on the AIME 2024 math benchmark. It outperforms OpenAI-o1 in cost efficiency, with API costs roughly 96% lower ($0.55 vs. $15 per million input tokens), and its smaller variants can run locally on consumer hardware. DeepSeek-R1 is open-source: alongside the full 671B-parameter model, it offers six distilled models ranging from 1.5B to 70B parameters for different applications.
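The price gap quoted above is easy to check by hand. The sketch below uses only the two input-token prices from the text; the helper names `savings_percent` and `input_cost_usd` are illustrative, not part of any API, and output-token pricing is not modeled.

```python
def savings_percent(cheaper: float, pricier: float) -> float:
    """Return how much lower `cheaper` is than `pricier`, as a percentage."""
    return (1 - cheaper / pricier) * 100


def input_cost_usd(tokens: int, price_per_million: float) -> float:
    """Return the cost in USD of sending `tokens` input tokens."""
    return tokens / 1_000_000 * price_per_million


# Prices per million input tokens, as quoted in the article.
R1_PRICE = 0.55
O1_PRICE = 15.00

if __name__ == "__main__":
    print(f"Savings: {savings_percent(R1_PRICE, O1_PRICE):.1f}%")
    # Hypothetical workload: 5 million input tokens per month.
    print(f"R1: ${input_cost_usd(5_000_000, R1_PRICE):.2f}")
    print(f"o1: ${input_cost_usd(5_000_000, O1_PRICE):.2f}")
```

The result lands at roughly 96 percent, in line with the figure above. Actual bills also depend on output tokens, which are priced separately.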

Step-by-step installation guide for DeepSeek-R1 (local)

To install DeepSeek-R1 locally using Ollama and Open WebUI, follow these steps:

1. Install Ollama via terminal (macOS/Linux):

    curl -fsSL https://ollama.com/install.sh | sh
    ollama -v  # check the Ollama version

2. Download a DeepSeek-R1 distilled model via Ollama:

    # Default 7B model (4.7GB, ideal for consumer GPUs)
    ollama run deepseek-r1

    # Larger 70B model (requires 24GB+ VRAM)
    ollama run deepseek-r1:70b

    # Full DeepSeek-R1 (requires 336GB+ VRAM for 4-bit quantization)
    ollama run deepseek-r1:671b

3. Set up Open WebUI for a private interface:

    docker run -d -p 3000:8080 \
      --add-host=host.docker.internal:host-gateway \
      -v open-webui:/app/backend/data \
      --name open-webui \
      --restart always \
      ghcr.io/open-webui/open-webui:main

Access the interface at http://localhost:3000 and select deepseek-r1:latest. All data stays on your machine, ensuring privacy.
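The 336GB figure quoted for the full model is consistent with a simple weights-only estimate: parameter count times bits per weight, divided by eight. The sketch below shows that arithmetic. `weight_memory_gb` is a hypothetical helper, and the estimate ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in decimal gigabytes."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Full 671B-parameter model at 4-bit and 16-bit precision.
    for bits in (4, 16):
        print(f"671B at {bits}-bit: ~{weight_memory_gb(671, bits):.1f} GB")
```

Treat the result as a lower bound when deciding which model tag your hardware can realistically hold.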

How to integrate DeepSeek-R1 into your projects

DeepSeek-R1 can be integrated locally or via its cloud API:

1. Local deployment (privacy-first):

    import openai

    # Connect to your local Ollama instance
    client = openai.OpenAI(
        base_url="http://localhost:11434/v1",
        api_key="ollama",  # Placeholder value; the local Ollama server needs no real key
    )

    response = client.chat.completions.create(
        model="deepseek-r1:XXb",  # Replace "XX" with the size of the distilled model you chose
        messages=[{"role": "user", "content": "Explain blockchain security"}],
        temperature=0.7,  # Controls creativity vs. precision
    )

    # Print the model's reply
    print(response.choices[0].message.content)

2. Using the official DeepSeek-R1 cloud API:

    import os

    import openai
    from dotenv import load_dotenv

    # Load DEEPSEEK_API_KEY from a local .env file
    load_dotenv()

    client = openai.OpenAI(
        base_url="https://api.deepseek.com/v1",
        api_key=os.getenv("DEEPSEEK_API_KEY"),
    )

    response = client.chat.completions.create(
        model="deepseek-reasoner",
        messages=[{"role": "user", "content": "Write web scraping code with error handling"}],
        max_tokens=1000,  # Limit costs for long responses
    )

    # Print the model's reply
    print(response.choices[0].message.content)

DeepSeek-R1 provides a cost-effective, privacy-focused alternative to OpenAI-o1, ideal for developers seeking to save money and maintain data security. For further assistance or to share experiences, users are encouraged to engage with the community.
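One practical detail when working with the local setup: R1-style models often return their chain of thought inline, wrapped in `<think>...</think>` tags ahead of the final answer. Whether you see these tags depends on the Ollama version and settings, so the following is a minimal sketch under that assumption. `split_reasoning` is a hypothetical helper, not part of the `openai` package or Ollama.

```python
import re

# Matches the first <think>...</think> block, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a reply that may contain <think> tags."""
    match = THINK_BLOCK.search(text)
    if match is None:
        # No reasoning block found; treat the whole reply as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    sample = "<think>2 + 2 is basic arithmetic.</think>\n\nThe answer is 4."
    reasoning, answer = split_reasoning(sample)
    print("Reasoning:", reasoning)
    print("Answer:", answer)
```

In a real project you would pass `response.choices[0].message.content` from the local example to this helper, then show only the answer to end users while logging the reasoning for debugging.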

Featured image credit: DeepSeek