Apple has killed its Apple Intelligence AI news feature after it fabricated stories and twisted real headlines into fiction.

Apple’s AI news was supposed to make life easier by summing up news alerts from multiple sources. Instead, it created chaos by pushing out fake news, often under trusted media brands.

Here’s where it all went wrong:

- Using the BBC’s logo, it invented a story claiming tennis star Rafael Nadal had come out as gay, completely misunderstanding a story about a Brazilian player.

- It jumped the gun by announcing teenage darts player Luke Littler had won the PDC World Championship – before he’d even played in the final.

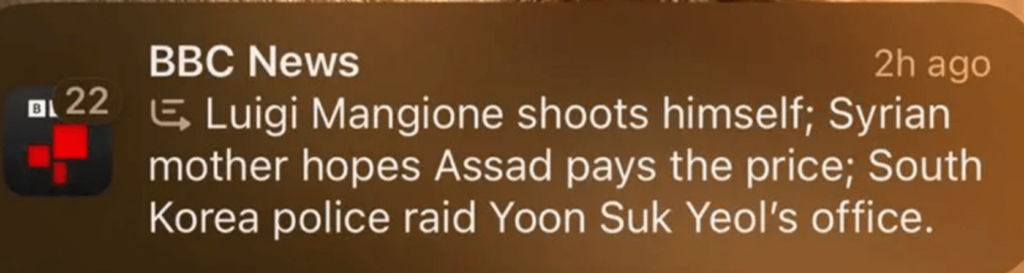

- In a more serious blunder, it created a fake BBC alert claiming Luigi Mangione, who’s accused of killing UnitedHealthcare CEO Brian Thompson, had killed himself.

- The system stamped The New York Times’ name on a completely made-up story about Israeli Prime Minister Benjamin Netanyahu being arrested.

The BBC, angered over seeing its name attached to fake stories, eventually filed a formal complaint. Press groups joined in, such as Reporters Without Borders, which warned that letting AI rewrite the news puts the public’s right to accurate information at risk.

The National Union of Journalists also called for the feature to be removed, saying readers shouldn’t have to guess whether what they’re reading is real.

Research has previously shown that even when people learn that AI-created media is fake, it still leaves a psychological ‘mark’ that persists afterwards.

Apple Intelligence – which offered a range of AI-powered features including AI news – was one of the headline features of the new iPhone 16 range.

Apple is a company that prides itself on polished products that ‘just work’, and it’s rare for Apple to backtrack, so it evidently had little choice here.

That said, Apple is not alone as far as AI blunders go. Not long ago, Google’s AI-generated search summaries told people they could eat rocks and put glue on pizza.

Apple plans to resurrect the feature with warning labels and special formatting to show when AI creates the summaries.

Should readers have to decode different fonts and labels just to know if they’re reading real news? And here’s a radical idea: Apple could just keep showing the news headline itself.

It all goes to show that, as AI continues to seep into every corner of our digital lives, some things – like receiving accurate news information – are simply too important to get wrong.

A big U-turn from Apple, but probably not the last we’ll see of its kind.
